Siemens EDA Andy Meier

Andy Meier is a Principal Product Manager within the Hardware Assisted Verification Division at Siemens EDA. He has 20+ years of experience in the semiconductor industry, specifically in the design and verification space. He has worked as a verification lead at both start-up and large enterprise …

The Veloce Ecosystem: Applications targeted to solving end user challenges

July 15th, 2024 by Andy Meier

In the rapidly evolving semiconductor and electronic design world, hardware-assisted verification (HAV) has become an indispensable part of the design process. Hardware platforms such as emulators and FPGA-based prototyping systems enhance the verification and validation process, ensuring designs meet their specifications efficiently and effectively. But what about the end users’ challenges and use cases? What tools and technologies are available to enable a more efficient verification and validation environment or to help with verification closure? What about teams tasked with HW/SW co-verification? Do they have the tools and applications they need to get their job done? I think asking these critical questions and examining the use cases is important to providing a comprehensive solution that addresses the needs of verification and validation teams. To help understand how Siemens EDA is solving this challenge, let us delve into some key use cases of HAV, enabled by Veloce Apps.

Software development, debug, and validation

Read the rest of The Veloce Ecosystem: Applications targeted to solving end user challenges

The Rise of Custom Acceleration

June 13th, 2024 by Vijay Chobisa

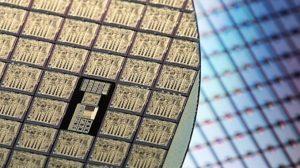

A trend sweeping through chip design is changing the traditional markets for CPUs and GPUs. Along the way, it is increasing the number of design starts for artificial intelligence (AI) and producing chips known as custom accelerators. The custom accelerator trend is driven by system companies becoming semiconductor companies that no longer rely on other companies to build their chips. They do most of the chip design in house to control the ecosystem, roadmaps, and time to market, adopting a different perspective. A good example is Apple. It now designs its own iPhone and Mac chips, and those chips are not the end product. Its end product is a system that includes peripherals and software.

In today’s landscape, both semiconductor and system companies are racing to make announcements about their own custom accelerators in a vibrant and growing market segment. A custom accelerator is an ASIC, a chip dedicated to a specific function. It is not a generic CPU or GPU. Nor is it an application serving a broad market. Instead, it is a well-defined, custom-designed accelerator for a specific AI or machine learning (ML) function.

The growing number of companies making AI or ML chips is generating requirements for extensive verification of big systems, because these chips are large and need to run massive workloads and software. The growth is in many areas, not just one. Add hyperscalers as another category not relying on traditional semiconductor companies. All are making their own chips, and the size of the workloads they run makes them a perfect fit for an emulation and prototyping platform.

Facing a New Age of IC Design Challenges Part 2

May 30th, 2024 by Jean-Marie Brunet

In an industry accustomed to incremental change, Veloce CS is a departure because it is a complete three-in-one system, a development that Ron Wilson, a longtime technology editor, explores with Brunet in this two-part blog.

Wilson: What is Siemens’ value proposition?

Brunet: Low cost of purchase and operation, seamless fit into the datacenter and shareability define the value proposition for the Veloce Strato CS and Veloce Primo CS systems at the enterprise level. Also, high performance and fast model compiles, scalability to 44-billion gates and congruence, meaning commonality of RTL models, operation, and databases for the two systems, extended to include the at-speed proFPGA CS system. One command language. One design model. One database. The Veloce CS system meets the challenges of this generation of leading-edge designs and the next.

The technology we use to build the Veloce CS system has changed since our previous generation of systems. FPGAs in Veloce Primo CS and Veloce proFPGA CS prototyping systems and the custom programmable chips in the Veloce Strato CS emulation system have higher capacity, greater speed and lower power consumption. We exploit 2.5D multi-die module technology and optical interconnect.

Brunet: Although the three systems are united by a common user interface, RTL models and database, each must meet the demands of its role in the design process. Each has distinct attributes and is implemented in a different way.

Capacity is a requirement because of enormous gate counts. Emulation and enterprise prototyping systems must be able to hold the entire compiled RTL model for the chip to observe real workload execution. Veloce Strato CS and Veloce Primo CS offer fine-grained, plug-in scalability from entry-level up to the equivalent of 45-billion gates through advanced ASIC processes and, for prototyping, advanced FPGAs, use of high-speed interconnect, and a modular architecture.

Speed is needed to exercise an entire chip design and execute real workloads. Veloce Strato CS exploits its new ASIC and interconnect. Veloce Primo CS uses advanced FPGAs and interconnect to give it execution speed greater than Veloce Strato CS.

Large models also require fast compilation. By developing our RTL model compilers in parallel with the internal architectures of Veloce Strato CS and Veloce Primo CS, we sped up compilation times and avoided problems that slow compilation. Recompilation will be fast when the RTL model needs to change.

Another need is fast, understandable trace and trigger operation, reflected differently in each. During emulation, designers are typically interacting in detail with the RTL, observing logic signals. Veloce Strato CS makes all signals in the model available to trace, trigger or breakpoint commands. FPGA-based Veloce Primo CS and Veloce proFPGA CS systems require access to individual signals to be specified when the model is compiled. Software developers, who typically interact with workload source code and variables, get the added speed of the FPGA-based architecture in exchange for fewer ways to change their access to logic-level signals.

The processes of compiling the RTL model for Veloce Strato CS and Veloce Primo CS are nearly identical. A switch directs the compiler to one system or the other while the model stays the same. Veloce proFPGA CS uses the same RTL model.

Congruence ensures teams can share a single RTL model to work together or separately and communicate efficiently. An RTL model will compile to produce the same behavior in each system at different execution speeds and levels of observability, even though the compiled code will be different for each. The same user command will produce the same result on Veloce Strato CS and Veloce Primo CS, and on Veloce proFPGA CS when the context makes sense.

The Veloce CS system features directly relate to the needs of design teams because enterprises need the ability to share naturally and easily between members of the same project and across enterprises. Software configurability is important, too. Different teams work on different RTL models of different sizes, a given for emulation systems.

Configurability via software comes at a cost for enterprise prototyping systems. The speed of the links between FPGAs is critical to overall system performance. The fastest links are hard-wired or are pluggable cables. Both inhibit flexibility. Allowing a design team days of access to an enterprise prototyping system in a remote datacenter to reconfigure cables is difficult for logistical and security reasons.

Granularity is an issue for energy and capital efficiency. Veloce Strato CS and Veloce Primo CS platforms drop seamlessly into enterprise datacenters and comply with footprint standards for datacenter racks using standard cabling conventions for power and networking. Veloce proFPGA CS is physically smaller than enterprise systems and is designed for benchtop use in a lab as well as rack-based datacenter use with the hexa blade offering.

Wilson: How can our readers get more information?

Brunet: I invite them to download the Veloce CS System whitepaper.

Facing a New Age of IC Design Challenges Part 1

May 16th, 2024 by Jean-Marie Brunet

In an industry accustomed to incremental change, Veloce CS is a departure because it is a complete three-in-one system, a development that Ron Wilson, a longtime technology editor, explores with Brunet in this 2-part blog.

Wilson: How do you describe the Veloce CS system?

Wilson: Why now?

Brunet: We recognized the discontinuity triggered by the convergence of AI-related hardware, software-defined products, new process technology and the emergence of chiplet-based systems. We responded by developing three economical, scalable systems that directly address the needs of design teams. Our goal with the Veloce CS system is to be the instrument to create the next-generation advanced ICs and multi-die modules, bringing new levels of productivity to design teams and new levels of design and schedule integrity. We envision a new value proposition for IC vendors, IP houses or systems developers who will transform these advanced IC designs into earnings growth.

Read the rest of Facing a New Age of IC Design Challenges Part 1

The Strategic Advantage in the Hardware-assisted Verification Segment

May 9th, 2024 by Jean-Marie Brunet

Welcome to the Siemens hardware-assisted verification blog. Technologies in this blog include software-based tools for architectural design and verification, focus-based applications, hardware emulation, and FPGA prototyping. EDA is generally a software-centric product space. So why does a hardware-oriented vendor like Siemens have a strategic advantage when it comes to chip design and verification? Because we design and verify our own chip for the Veloce Strato CS emulator.

In addition, the Veloce hardware-assisted verification portfolio is expanding rapidly and has taken a primary role in the pre-silicon verification process. It is now an integrated system of software-based tools for architectural design and verification, focus-based applications, hardware emulation, and FPGA prototyping. The Veloce portfolio is the perfect example of designing a chip targeted for a specific application through our hardware emulator known as Veloce. We design and verify Veloce’s Crystal chip and use the same tools we are offering customers. This makes us intimately familiar with their challenges because we are exposed to the same problems that they are, specifically how big chips get designed and verified to meet time-to-completion schedules.

Every new generation of our chip gets verified by our current hardware emulator generation, giving us insight into our customers’ challenges and credibility when we speak to them, as well as an insider’s understanding of the trends. Since we design complex devices, we know the verification trends because we’re going through them ourselves. It’s an important consideration for companies evaluating their HAV providers.

We see firsthand that verification has changed because the requirements have changed dynamically. It is no longer only about making sure the chip is working. Other criteria are equally or more important now. What differentiates us is knowing what kind of verification is needed, and when validation alone may not be enough, in building a chip. After all, our designers are no different than AI accelerator chip designers. Our designers make sure we have chips that are deliverable, whether it’s for our emulator or for a customer’s system.

Beyond chip complexity and size, other things have changed. For us, it’s the importance of yield, which directly impacts margins. Our designers continually refine our processes to improve yield and consume less power. If our chip, system or emulator is going to a data center, our designers need to consider that the data center charges by the power plug and not by how much space is being consumed. That means power is important, and it is one reason our system is popular with chip designers and used to verify chip designs of 15 billion gates and larger. Speed is important too, and our HAV runs their workloads faster.

Hardware-assisted verification is an important market segment and is growing tremendously because trends are accelerating and not slowing down. Intractable chip design complexity, size and performance challenges mean this segment will continue growing for the next five years and beyond. To learn more, read our latest white paper, Facing a new age of challenges in IC design.



Wilson: How do you differentiate between the three?

Brunet: The Veloce CS system is architected for congruency, speed and modularity and supports design sizes from 40 million gates to more than 40 billion gates. It executes full system workloads with elevated visibility and congruency, enabling teams to select the right tool for a task’s unique requirements for faster time to project completion and decreased cost per verification cycle.
