EDACafe Editorial Roberto Frazzoli

Roberto Frazzoli is a contributing editor to EDACafe. His interests as a technology journalist focus on the semiconductor ecosystem in all its aspects. Roberto started covering electronics in 1987. His weekly contribution to EDACafe started in early 2019. Samsung’s 3-nm GAA in production; training large NLP models on a single Cerebras device; reducing metal line resistanceJuly 1st, 2022 by Roberto Frazzoli

Quick updates on the impact of Ukraine war. Global exports of semiconductors to Russia have reportedly slumped by 90% due to export controls. And U.S. Commerce Secretary Gina Raimondo has reportedly threatened to “shut down” China’s SMIC foundry if it is found to be supplying chips to Russia. “We will shut them down and we can, because almost every chip in the world and in China is made using U.S. equipment and software,” she said. Moving to new fab updates, Taiwan’s GlobalWafers will reportedly invest $5 billion on a new plant in Sherman, Texas, to make 300-millimeter silicon wafers, switching to the United States after a failed investment on Germany’s Siltronic. Samsung’s 3-nm GAA in production Samsung Electronics has announced that it has started initial production of its 3-nanometer process node applying its Gate-All-Around (GAA) transistor architecture called Multi-Bridge-Channel FET (MBCFET), enabling a supply voltage reduction and a higher drive current capability. Initial applications are targeting high performance, low power computing, with plans to expand to mobile processors. According to the company, Samsung’s proprietary technology – which utilizes nanosheets with wider channels – allows higher performance and greater energy efficiency compared to GAA technologies using nanowires with narrower channels. However, channel width in Samsung’s 3nm GAA technology can be adjusted to obtain various power/performance combinations. Compared to 5nm process, Samsung claims that the first-generation 3nm process can reduce power consumption by up to 45%, improve performance by 23% and reduce area by 16%; the second-generation 3nm process is expected to reduce power consumption by up to 50%, improve performance by 30% and reduce area by 35%.

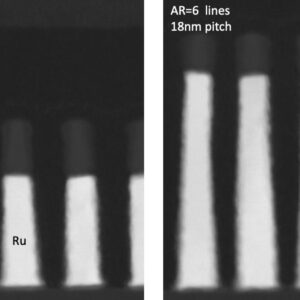

EDA updates In two separate announcements on June 28, both Cadence and Synopsys unveiled new solutions tailored to Arm-based mobile applications, for a range of processors including the Cortex-A715, Cortex-A510 and Cortex-X3 CPUs and the Mali-G715, Mali-G615 and Immortalis-G715 GPUs. Keysight has introduced the new PathWave Advanced Design System (ADS) 2023, an integrated design and simulation software for the radio frequency and microwave industry. Cadence has announced Xcelium Apps, a portfolio of domain-specific technologies implemented natively on the Cadence Xcelium Logic Simulator kernel, claiming up to a 10X regression throughput improvement. Toshiba has made available highly accurate G2 SPICE models for a range of its power devices – the low voltage MOSFETs (12V-300V) and medium to high voltage MOSFETs (400V to 900V) – to simulate transient characteristics more accurately. Intel Foundry Services (IFS) has launched its IFS Cloud Alliance to ensure that EDA tools are optimized for the cloud and for Intel’s process design kits. Initial members of the program include Amazon Web Services, Microsoft Azure, Ansys, Cadence, Siemens EDA and Synopsys. New Risc-V specifications Risc-V International has announced its first four specification and extension approvals of 2022: Efficient Trace for Risc-V (E-Trace), Risc-V Supervisor Binary Interface (SBI), Risc-V Unified Extensible Firmware Interface (UEFI) specifications, and the Risc-V Zmmul multiply-only extension. Training a 20-billion parameter AI model on a single Cerebras device Well known for its gigantic wafer-scale AI chips, Sunnyvale-based Cerebras has announced the ability to train models with up to 20 billion parameters on a single CS-2 system – which is based on the company’s second-generation chip, the 2.6 trillion transistor, 850,000-core WSE-2. Natural language processing is the main application that can take advantage of this ability, as the number of parameters for NLP models keeps growing at an impressive pace. According to Cerebras, training on a single system reduces the engineering time necessary to run large NLP models from months to minutes, eliminating the partitioning of the model across hundreds or thousands of GPUs. Another element of the solution is Cerebras’ Weight Streaming architecture which disaggregates memory and compute, allowing memory – which is used to store parameters – to grow separately from compute. According to the company, the solutions “democratizes” access to the largest NLP models such as GPT-3 1.3B, GPT-J 6B, GPT-3 13B and GPT-NeoX 20B, making them affordable for a larger number of companies. It also enables users to switch between GPT-J and GPT-Neo, for example, with a few keystrokes, a task that would take months of engineering time to achieve on a cluster of hundreds of GPUs. A DSP with embedded FPGA to allow changeable instruction set Jointly announced by the two partner companies, an SoC where a Flex Logix embedded FPGA (eFPGA) is connected to a CEVA X2 DSP instruction extension interface is “the world’s first” successful silicon implementation of a DSP with a changeable instruction set. In this reconfigurable computing solution, the DSP instruction set architecture can be adapted to different workloads with custom hard-wired instructions that can be changed at any time. 40% metal line resistance reduction using ruthenium and a semi-damascene process Smaller process nodes involve ever thinner metal lines, translating in higher resistance and making it difficult to reduce power consumption. At the recent IEEE International Interconnect Technology Conference, Belgian research institute Imec presented options to reduce the metal line resistance at tight metal pitches, mitigating the resistance/capacitance increase of future interconnects using direct metal patterning. For the first time, high aspect ratio (AR=6) processing of ruthenium in a semi-damascene fashion is experimentally shown to result in about 40% resistance reduction without sacrificing area. In a study presented at the 2022 VLSI conference, Imec also demonstrates functional two-metal-level semi-damascene module with fully self-aligned vias at 18nm metal pitch – again with ruthenium as a metal. Unlike dual-damascene, semi-damascene integration relies on the direct patterning of the interconnect metal for making the lines (referred to as ‘subtractive metallization’), and chemical mechanical polishing of the metal is not needed for completing the process flow. Unlike copper, ruthenium does not require a metal barrier, so the conductive area can be fully utilized by the interconnect metal itself.  Cross-section TEMs of ruthenium lines with 18nm metal pitch. Left: aspect ratio 3. Right: aspect ratio 6. The TEMs demonstrate a nearly vertical profile of the ruthenium lines and scalability of the current scheme towards higher aspect ratios. Copyright: imec PCIe 7.0 to double the data rate PCI-SIG has announced the PCIe 7.0 Specification, which will double the data rate to 128 GT/s. The new standard will use PAM4 signaling (Pulse Amplitude Modulation with four levels). The PCIe 7.0 specification is targeted for release to members in 2025. Acquisitions Accenture has acquired XtremeEDA, (Ottawa, Canada), a provider of silicon design services specializing in digital design verification, processor and system integration and hardware security. The acquisition aims at expanding Accenture Cloud First’s capabilities in edge computing. In 2020 and 2021 Accenture Canada acquired four more Canadian companies: Gevity, Cloudworks, Avenai, and Callisto Integration. |

|

|

|||||

|

|

|||||

|

|||||