EDACafe Editorial Roberto Frazzoli

Roberto Frazzoli is a contributing editor to EDACafe. His interests as a technology journalist focus on the semiconductor ecosystem in all its aspects. Roberto started covering electronics in 1987. His weekly contribution to EDACafe started in early 2019.

Nvidia’s datacenter CPU; fast AI training on x86; Siemens acquires OneSpin; EDA Q4 results

April 15th, 2021 by Roberto Frazzoli

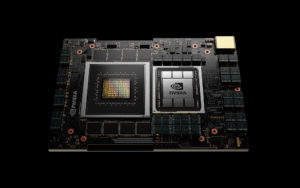

Nvidia entering the datacenter CPU market, and thus becoming a direct competitor of Intel in this area, is definitely this week’s top news. Unrelated to this announcement, new academic research adds to the debate on heterogeneous compute. More updates this week include an important EDA acquisition and the latest EDA market figures; but first, let’s meet Grace.

Grace, the new Arm-based Nvidia datacenter CPU

Intel’s recently appointed CEO Pat Gelsinger is facing an additional challenge: defending the company’s datacenter CPU market share against Grace, the new Nvidia CPU, which promises 10x the performance of today’s fastest servers on the most complex AI and high performance computing workloads. Announced at the current GTC event and expected to be available at the beginning of 2023, the new Arm-based processor is named after Grace Hopper, the U.S. computer-programming pioneer.

In his GTC keynote, Nvidia CEO Jensen Huang explained that Grace is meant to address the bottleneck that still makes it difficult to process large amounts of data, particularly for AI models. His example was based on half of a DGX system: “Each Ampere GPU is connected to 80GB of super-fast memory running at 2 TB/sec,” he said. “Together, the four Amperes process 320 GB at 8 Terabytes per second. Contrast that with CPU memory, which is 1TB large, but only 0.2 Terabytes per second. The CPU memory is three times larger but forty times slower than the GPU. We would love to utilize the full 1,320 GB of memory in this node to train AI models. So, why not something like this? Make faster CPU memories, connect four channels to the CPU, a dedicated channel to feed each GPU. Even if a package can be made, PCIe is now the bottleneck. We can surely use NVLink. NVLink is fast enough. But no x86 CPU has NVLink, not to mention four NVLinks.”

Huang pointed out that Grace is Arm-based and purpose-built for accelerated computing applications that process large amounts of data, such as AI.
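Huang’s comparison is simple arithmetic on the figures he quoted for half of a DGX system. The Python sketch below reproduces it; the constant and function names are illustrative, and 1 TB is counted as 1,000 GB, which is what his 1,320 GB total implies.

```python
# Reproduces the memory comparison Jensen Huang made for half of a DGX system
# (four Ampere GPUs plus the host CPU), using only the figures he quoted.
from typing import NamedTuple

GPU_COUNT = 4
GPU_MEM_GB = 80       # memory per Ampere GPU, GB
GPU_BW_TBS = 2.0      # memory bandwidth per GPU, TB/s
CPU_MEM_GB = 1_000    # CPU memory: 1 TB, counted as 1,000 GB
CPU_BW_TBS = 0.2      # CPU memory bandwidth, TB/s


class NodeMemory(NamedTuple):
    gpu_total_gb: int      # combined GPU memory
    gpu_total_tbs: float   # combined GPU memory bandwidth
    size_ratio: float      # how many times larger the CPU memory is
    speed_ratio: float     # how many times slower the CPU memory is
    node_total_gb: int     # all memory available in the half node


def node_memory() -> NodeMemory:
    gpu_gb = GPU_COUNT * GPU_MEM_GB
    gpu_tbs = GPU_COUNT * GPU_BW_TBS
    return NodeMemory(
        gpu_total_gb=gpu_gb,
        gpu_total_tbs=gpu_tbs,
        size_ratio=CPU_MEM_GB / gpu_gb,
        speed_ratio=gpu_tbs / CPU_BW_TBS,
        node_total_gb=CPU_MEM_GB + gpu_gb,
    )


if __name__ == "__main__":
    m = node_memory()
    print(f"GPUs: {m.gpu_total_gb} GB at {m.gpu_total_tbs:.0f} TB/s")
    print(f"CPU memory is {m.size_ratio:.1f}x larger but {m.speed_ratio:.0f}x slower")
    print(f"Total memory in the node: {m.node_total_gb:,} GB")
```

With these inputs, the CPU memory comes out at 3.125 times the size of the combined GPU memory and 40 times slower, consistent with the “three times larger but forty times slower” remark.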
“The Arm core in Grace is a next generation off-the-shelf IP for servers,” he said. “Each CPU will deliver over 300 SPECint with a total of over 2,400 SPECint_rate CPU performance for an 8-GPU DGX. For comparison, today’s DGX, the highest performance computer in the world, is 450 SPECint_rate.” He continued: “This powerful, Arm-based CPU gives us the third foundational technology for computing, and the ability to rearchitect every aspect of the data center for AI. (…) Our data center roadmap is now a rhythm consisting of three chips: CPU, GPU, and DPU. Each chip architecture has a two-year rhythm with likely a kicker in between. One year will focus on x86 platforms, one year will focus on Arm platforms. Every year will see new exciting products from us. The Nvidia architecture and platforms will support x86 and Arm – whatever customers and markets prefer,” Huang said.

The NVLink interconnect technology provides a 900 GB/s connection between Grace and Nvidia GPUs. Grace will also use an LPDDR5x memory subsystem. The new architecture provides unified cache coherence with a single memory address space, combining system memory and HBM GPU memory. The Swiss National Supercomputing Centre (CSCS) and the U.S. Department of Energy’s Los Alamos National Laboratory are the first to announce plans to build Grace-powered supercomputers. According to Huang, the CSCS supercomputer, called Alps, “will be 20 exaflops for AI, 10 times faster than the world’s fastest supercomputer today.” The system will be built by HPE and come online in 2023.
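The SPECint figures can be checked the same way. This sketch assumes one Grace CPU per GPU, as the 2,400 total for an 8-GPU DGX implies, and treats the per-CPU figure as a SPECint_rate score. Since Huang gave lower bounds (“over”), the result is a minimum estimate, not a measured value; the names are illustrative.

```python
# Back-of-the-envelope check of the SPECint figures from Huang's keynote.
# Assumption (inferred, not stated verbatim): one Grace CPU per GPU in an 8-GPU DGX.
from typing import Tuple

GRACE_SPECINT_RATE_PER_CPU = 300  # "over 300" per CPU, so a lower bound
GPUS_PER_DGX = 8
TODAY_DGX_SPECINT_RATE = 450      # figure quoted for today's DGX


def grace_dgx_estimate() -> Tuple[int, float]:
    """Return (minimum total SPECint_rate, uplift over today's DGX)."""
    total = GRACE_SPECINT_RATE_PER_CPU * GPUS_PER_DGX
    uplift = total / TODAY_DGX_SPECINT_RATE
    return total, uplift


if __name__ == "__main__":
    total, uplift = grace_dgx_estimate()
    print(f"8-GPU Grace DGX: at least {total} SPECint_rate")
    print(f"Uplift over today's DGX: about {uplift:.1f}x")
```

On these assumptions the Grace-based DGX would offer at least roughly 5.3 times the 450 SPECint_rate Huang cited for today’s DGX.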

Fast AI training on x86 architectures

But do all datacenters really need to adopt the heterogeneous compute paradigm, i.e. a combination of CPUs, GPUs and DPUs? A paper presented by researchers from Rice University (Houston, Texas) at the recent MLSys Conference (Machine Learning and Systems) shows that very fast AI training is possible on x86 architectures, suggesting a path to doing without other types of processors. The new work builds on the previously developed SLIDE system, which exploits the fact that, depending on the input, only a very sparse subset of a neural network’s parameters needs to be updated. “It is quite wasteful to update hundreds of millions of parameters based on mistakes made on a handful of data instances”, the researchers maintain. “Exploiting this sparsity results in significantly cheaper alternatives”. SLIDE leverages probabilistic hash tables to identify very sparse “active” sets of parameters. This enables efficient, sparse gradient descent via ‘approximate query processing’, a technique widely studied on x86 hardware, instead of matrix multiplication. With this new paper, the team shows further optimizations that take advantage of the AVX-512 (Advanced Vector Extensions) instructions available on Intel’s Cooper Lake and Cascade Lake processors. Results show training speed improvements ranging from 2x up to 15.5x, depending on the dataset, compared to TensorFlow implementations on an Nvidia Tesla V100. “We can probably get away with the burden of handling and maintaining specialized hardware”, the researchers conclude.

SambaNova gets additional funding

Despite the growing AI processing capabilities of GPUs and CPUs from incumbent market leaders, startups developing specialized AI architectures keep attracting venture capital investments. This is the case, at least, for SambaNova Systems, which has just raised $676 million in a Series D funding round led by SoftBank Vision Fund 2.
This brings the company’s total funding to more than $1 billion, making it “the best-funded AI systems and services platform startup in the world”. SambaNova’s valuation now surpasses $5 billion.

Siemens acquires OneSpin

Siemens Digital Industries Software has signed an agreement with London-based Azini Capital to acquire OneSpin Solutions, a Munich-based provider of formal assertion-based verification (ABV) software. Siemens plans to add OneSpin technology to its Xcelerator portfolio. The acquisition is expected to close in the second quarter of calendar year 2021. Terms of the transaction were not disclosed. On this deal, John Cooley has just posted his spicy behind-the-scenes backstory.

EDA market growth in Q4 2020

According to the latest report from the ESD Alliance, Electronic System Design industry revenue increased by over $1 billion in 2020, marking a new milestone for the industry. Revenue increased 15.4% in Q4 2020 to $3,031.5 million, compared to $2,626.3 million in Q4 2019. The four-quarter moving average rose by 11.6%, the highest annual growth since 2011 and the second highest in the last 14 years. By product category and by region, the highest growth rates in Q4 2020 compared to Q4 2019 were achieved by IC Physical Design and Verification (+36.6%) and by Asia Pacific (+23.4%), respectively.

Upcoming virtual events

Ansys Simulation World will take place on April 20-21 for America/EMEA and on April 21-22 for APAC. Embedded IoT World will be online on April 28 and 29. ASMC 2021, SEMI’s Advanced Semiconductor Manufacturing Conference, will run from May 10 to 12, covering topics ranging from yield management and metrology to new developments in improving post-pandemic manufacturing agility.