Nvidia entering the datacenter CPU market – and becoming a direct competitor of Intel in this area – is definitely this week’s top news. Unrelated to this announcement, new academic research adds to the debate on heterogeneous compute. More updates this week include an important EDA acquisition and EDA figures; but first, let’s meet Grace.

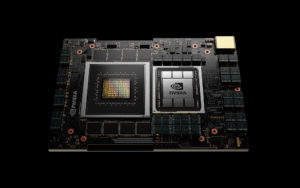

Grace, the new Arm-based Nvidia datacenter CPU

Intel’s recently appointed CEO Pat Gelsinger is facing an additional challenge: defending the company’s datacenter CPU market share against Grace, the new Nvidia CPU that promises 10x the performance of today’s fastest servers on the most complex AI and high-performance computing workloads. Announced at the current GTC event and expected to be available at the beginning of 2023, the new Arm-based processor is named for Grace Hopper, the U.S. computer-programming pioneer.

In his GTC keynote, Nvidia CEO Jensen Huang explained that Grace is meant to address the bottleneck that still makes it difficult to process large amounts of data, particularly for AI models. His example was based on half of a DGX system: “Each Ampere GPU is connected to 80GB of super-fast memory running at 2 TB/sec,” he said. “Together, the four Amperes process 320 GB at 8 Terabytes per second. Contrast that with CPU memory, which is 1TB large, but only 0.2 Terabytes per second. The CPU memory is three times larger but forty times slower than the GPU. We would love to utilize the full 1,320 GB of memory in this node to train AI models. So, why not something like this? Make faster CPU memories, connect four channels to the CPU, a dedicated channel to feed each GPU. Even if a package can be made, PCIe is now the bottleneck. We can surely use NVLink. NVLink is fast enough. But no x86 CPU has NVLink, not to mention four NVLinks.”

Huang pointed out that Grace is Arm-based and purpose-built for accelerated computing applications that handle large amounts of data, such as AI. “The Arm core in Grace is a next generation off-the-shelf IP for servers,” he said. “Each CPU will deliver over 300 SPECint with a total of over 2,400 SPECint_rate CPU performance for an 8-GPU DGX. For comparison, today’s DGX, the highest performance computer in the world, is 450 SPECint_rate.”

He continued, “This powerful, Arm-based CPU gives us the third foundational technology for computing, and the ability to rearchitect every aspect of the data center for AI. (…) Our data center roadmap is now a rhythm consisting of three chips: CPU, GPU, and DPU. Each chip architecture has a two-year rhythm with likely a kicker in between. One year will focus on x86 platforms, one year will focus on Arm platforms. Every year will see new exciting products from us. The Nvidia architecture and platforms will support x86 and Arm – whatever customers and markets prefer,” Huang said.
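The arithmetic behind the keynote figures can be checked with a short Python sketch. It uses only the numbers quoted in the keynote; the function and constant names are illustrative, and reading “1TB” as 1,000 GB is an assumption chosen because it matches the quoted 1,320 GB total.

```python
# Figures as quoted in the keynote for half of a DGX system (four Ampere GPUs).
GPU_COUNT = 4
GPU_MEMORY_GB = 80          # per GPU
GPU_BANDWIDTH_TBPS = 2.0    # per GPU
CPU_MEMORY_GB = 1000        # assumption: "1TB" read as 1,000 GB (matches the 1,320 GB total)
CPU_BANDWIDTH_TBPS = 0.2

# SPECint_rate figures as quoted: projected Grace-based 8-GPU DGX vs. today's DGX.
GRACE_DGX_SPECINT_RATE = 2400   # quoted as "over 2,400", so a lower bound
CURRENT_DGX_SPECINT_RATE = 450


def node_memory_summary() -> dict:
    """Aggregate the quoted per-device figures for the four-GPU half node."""
    gpu_memory_gb = GPU_COUNT * GPU_MEMORY_GB
    gpu_bandwidth_tbps = GPU_COUNT * GPU_BANDWIDTH_TBPS
    return {
        "gpu_memory_gb": gpu_memory_gb,
        "gpu_bandwidth_tbps": gpu_bandwidth_tbps,
        "total_memory_gb": gpu_memory_gb + CPU_MEMORY_GB,
        "cpu_to_gpu_capacity_ratio": CPU_MEMORY_GB / gpu_memory_gb,
        "gpu_to_cpu_bandwidth_ratio": gpu_bandwidth_tbps / CPU_BANDWIDTH_TBPS,
    }


def specint_rate_gain() -> float:
    """Ratio of the quoted Grace-based DGX SPECint_rate to today's DGX."""
    return GRACE_DGX_SPECINT_RATE / CURRENT_DGX_SPECINT_RATE


if __name__ == "__main__":
    for name, value in node_memory_summary().items():
        print(f"{name}: {value:g}")
    print(f"specint_rate_gain: {specint_rate_gain():.2f}")
```

Under these assumptions, the CPU-to-GPU capacity ratio comes out at 3.125, which Huang rounds to “three times larger”, and the bandwidth ratio is 40, matching “forty times slower”. The quoted SPECint_rate figures imply a gain of roughly 5.3x at minimum, since the Grace number is stated as “over 2,400”.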

The NVLink interconnect technology provides a 900 GB/s connection between Grace and Nvidia GPUs. Grace will also utilize an LPDDR5x memory subsystem. The new architecture provides unified cache coherence with a single memory address space, combining system and HBM GPU memory.

The Swiss National Supercomputing Centre (CSCS) and the U.S. Department of Energy’s Los Alamos National Laboratory are the first to announce plans to build Grace-powered supercomputers. According to Huang, the CSCS supercomputer, called Alps, “will be 20 exaflops for AI, 10 times faster than the world’s fastest supercomputer today.” The system will be built by HPE and come online in 2023.