Despite the virtual format, the 2020 Fall edition of the Linley Processor Conference, organized by the technology analysis firm The Linley Group, is offering its biggest program ever, with 33 technical talks across six days: October 20 to 22, and October 27 to 29. The high number of presentations confirms that these are really exciting times for innovative processing architectures. In his keynote, Linley Gwennap, Principal Analyst of The Linley Group, explained the proliferation of application-specific accelerators, describing them as a way to extend Moore’s Law, since moving to the most advanced process nodes now offers little benefit. In this article, EDACafe provides a quick overview of some of the presentations given during the first part of the event, focusing mainly on new announcements; next week we will complete our coverage with an overview of the second part of the conference.

AI-specific architectures: Flex Logix, Brainchip, Groq, Hailo, Cornami

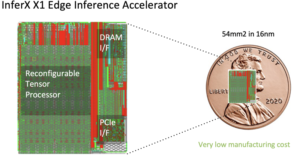

Flex Logix’s InferX X1 chip, announced at the 2019 Linley Fall Conference, is now available. According to the company, the device is 3 to 18 times more efficient than Nvidia’s GPU architecture for large models with megapixel images, and is also much more efficient in terms of throughput per square millimeter of die area: it measures 54 mm² compared to 545 mm² for Nvidia’s Tesla T4. Flex Logix claims that the InferX X1 runs faster than Nvidia’s Xavier NX on real customer models at much more attractive prices: from $99 to $199 (in 1,000-unit quantities), depending on speed grade.

Brainchip, which offers a neuromorphic System-on-Chip introduced at last spring’s edition of the Linley Conference, has added details on “activation sparsity” and “activity regularization”. Activation sparsity is the percentage of zero-valued entries in the previous layer’s activation maps; higher activation sparsity means fewer operations. CNNs converted to the event domain (i.e. spiking networks) automatically start at 40-60% activation sparsity due to the use of ReLU and batch normalization; Brainchip further increases activation sparsity by using activity regularization during training. Activity regularization is the process of adding more information to the loss function to balance the model’s accuracy and activation sparsity. By increasing activation sparsity, activity regularization further reduces computation.
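
To make the two terms concrete, here is a minimal Python sketch. It is not Brainchip’s implementation: the function names, the sample values, and the choice of an L1 penalty on the activations are all assumptions made for illustration, since the presentation as reported here does not specify the form of the regularization term.

```python
def relu(x):
    """Rectified linear unit: negative inputs become exactly zero."""
    return x if x > 0.0 else 0.0


def activation_sparsity(activations):
    """Fraction of zero-valued entries in a flat list of activations."""
    if not activations:
        raise ValueError("activations must not be empty")
    zeros = sum(1 for a in activations if a == 0.0)
    return zeros / len(activations)


def regularized_loss(task_loss, activations, l1_weight):
    """Task loss plus a weighted mean-absolute-activation penalty.

    ASSUMPTION: an L1 penalty is one common way to encourage sparse
    activations; the actual term used by Brainchip is not stated.
    """
    penalty = sum(abs(a) for a in activations) / len(activations)
    return task_loss + l1_weight * penalty


# Hypothetical pre-activation values for one layer (illustrative only).
pre_activations = [-1.5, 0.2, -0.3, 2.0, -0.7, 0.0, 1.1, -2.2]
activations = [relu(v) for v in pre_activations]

print("activation sparsity:", activation_sparsity(activations))
print("loss without penalty:", regularized_loss(0.5, activations, 0.0))
print("loss with penalty:", regularized_loss(0.5, activations, 0.1))
```

In this sketch, `l1_weight` plays the balancing role described above: a larger value pushes training toward more zero activations at the possible expense of accuracy, while a value of zero leaves the original task loss unchanged.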