EDACafe Editorial Roberto Frazzoli

Roberto Frazzoli is a contributing editor to EDACafe. His interests as a technology journalist focus on the semiconductor ecosystem in all its aspects. Roberto started covering electronics in 1987. His weekly contribution to EDACafe started in early 2019.

Intel and the future of neuromorphic technologies

November 22nd, 2019 by Roberto Frazzoli

The Intel Neuromorphic Research Community (INRC), created by Intel to support its Loihi chip, now has its first corporate members: Accenture, Airbus, GE and Hitachi. These four companies are planning to explore the potential of neuromorphic technology in a wide range of applications. Accenture Labs is interested in specialized computing and heterogeneous hardware for use cases such as smart vehicle interaction, distributed infrastructure monitoring and speech recognition. Airbus, in collaboration with Cardiff University, is looking to advance its existing in-house automated malware detection technology, leveraging Loihi’s low power consumption for constant monitoring. GE will focus on online learning at the edge of the industrial network to enable adaptive controls, autonomous inspection and new capabilities such as real-time inline compression for data storage. Hitachi plans to use Loihi to quickly recognize and understand the time series data from many high-resolution cameras and sensors.

The Intel Neuromorphic Research Community has tripled in size over the past year; it now has more than 75 members, mostly universities, government labs and neuromorphic start-up companies. These organizations have developed the basic tools, algorithms and methods needed to make Intel’s neuromorphic technology useful in real-world applications. Community members have published several papers in academic and scientific journals; some of these works can be accessed from the INRC website. Now Intel is building on this basic research to win the interest of large corporations. “We are now encouraging commercially-oriented groups to join the community”, said Mike Davies, director of Intel’s Neuromorphic Computing Lab, in the announcement’s press release.

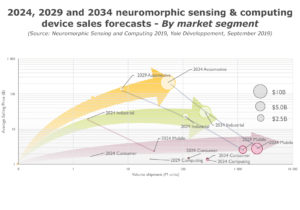

Bridging the gap between academic research and large tech-based businesses

Descriptions of the potential applications pursued by the INRC’s new corporate members are still quite generic. While we wait for these four corporations to come up with real-world applications based on Loihi, the single most interesting aspect of this announcement is probably the fact that Intel’s neuromorphic initiative is bridging the gap between academic research and large tech-based businesses – even though Loihi is not for sale at the moment. Intel’s approach seems to be unusual, since most of the existing neuromorphic chips are either purely academic efforts (see our overview) or real products offered by small young companies such as BrainChip and aiCTX. And, as of today, the strategy pursued by the other major tech player in the neuromorphic arena – IBM with its TrueNorth chip – seems to be different from Intel’s.

Will the neuromorphic market take off?

The real potential of neuromorphic technology is still a much-debated issue in the AI community. According to a recent report from market research firm Yole Développement, though, the neuromorphic market is set to take off after 2024, with significant growth in the following decade. Yole’s forecast at the packaged semiconductor level is the following: neuromorphic sensing will grow from $43M in 2024 to $2.1B in 2029 ($4.7B in 2034); neuromorphic computing will grow from $69M in 2024 to $5.0B in 2029 ($21.3B in 2034). The basic assumption behind Yole’s forecast is that the current “brute force computing” approach to AI (non-neuromorphic) is facing three hurdles: “First, the economics of Moore’s Law make it very difficult for a start-up to compete in the AI space and therefore is limiting competition. Second, data overflow makes current memory technologies a limiting factor.
And third, the exponential increase in computing power requirements has created a ‘heat wall’ for each application.” Hence, “From the technology point of view, all the attributes of a disruptive scenario is here. The current deep learning paradigm only serve certain uses cases. Neuromorphic approaches (…) have their own specific benefits which will address new use cases and may grow unnoticed before they become mandatory and ultimately dominant,” Yole’s analysts conclude.

Open questions

Will this scenario turn into reality? Many fundamental questions are still waiting for answers, both from neuroscience and from semiconductor technology. Spiking neural networks are ‘the technology of choice’ for biological brains, but is our current knowledge of biological brains deep enough to extract models? Is the incredible complexity of nervous tissue just a side effect of using ‘meat’ as a construction material, or is it relevant to the functions performed?

On the other hand, semiconductor technologies have proven capable of overcoming Moore’s Law-related hurdles many times before. Examples of these last-minute rescues include quadruple patterning and, lately, EUV lithography. Can’t we expect future nanoelectronics solutions to prolong the life of the “brute force computing” approach to AI? Already today, different architectures lead to extremely different performance levels in non-neuromorphic AI acceleration: for example (as reported by EDACafe a few weeks ago), Flex Logix claims that its InferX X1 chip has only 7% of the TOPS and 5% of the DRAM bandwidth of the Tesla T4, yet delivers 75% of the T4’s inference performance running YOLOv3 at two megapixels. And, according to its critics, the neuromorphic approach is not well suited to digital technologies, as it involves the overhead of coding data into spikes – while low-power event-based processing can be performed even without using spiking neural networks.
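As a rough aid to reading the numbers quoted above, the following Python sketch works out the annual growth rates implied by Yole’s forecast and the efficiency ratios implied by the Flex Logix claim. It only restates figures from the text; the `cagr` helper and the variable names are illustrative assumptions, and the results are back-of-the-envelope arithmetic, not figures published by Yole or Flex Logix.

```python
def cagr(start, end, years):
    """Compound annual growth rate between two values (hypothetical helper)."""
    return (end / start) ** (1 / years) - 1


# Yole forecast figures quoted in the article, in millions of US dollars.
forecast = {
    "neuromorphic sensing": {2024: 43, 2029: 2100, 2034: 4700},
    "neuromorphic computing": {2024: 69, 2029: 5000, 2034: 21300},
}

for segment, values in forecast.items():
    first = cagr(values[2024], values[2029], 5)   # 2024 to 2029
    second = cagr(values[2029], values[2034], 5)  # 2029 to 2034
    print(f"{segment}: {first:.0%}/year 2024-2029, {second:.0%}/year 2029-2034")

# Flex Logix claim quoted in the article: InferX X1 relative to Tesla T4.
relative_tops = 0.07     # 7% of the TOPS
relative_dram_bw = 0.05  # 5% of the DRAM bandwidth
relative_perf = 0.75     # 75% of the YOLOv3 inference performance

# Inference performance obtained per unit of each resource, relative to the T4.
perf_per_tops = relative_perf / relative_tops
perf_per_bw = relative_perf / relative_dram_bw
print(f"performance per TOPS vs T4: {perf_per_tops:.1f}x")
print(f"performance per unit of DRAM bandwidth vs T4: {perf_per_bw:.1f}x")
```

Read this way, the forecast implies that both segments would more than double every year between 2024 and 2029 before slowing considerably, while the Flex Logix claim amounts to roughly an order of magnitude more inference performance per TOPS than the T4 on that specific workload.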
In other words, many factors could affect the growth of the market.

Pursuing AI performance vs. studying biological brains

Undoubtedly, over the next few years the evolution of the AI market will enable analysts to confirm or refine their forecasts. In the meantime, an interesting aspect of Intel’s neuromorphic initiative is its potential to keep the relationship between AI and brain research alive. Since the publication of AlexNet in 2012, neural networks have grown into an industry in their own right, with its own ecosystem, benchmarks, etc. Clearly, this industry is only interested in AI performance, which – on the inference side – means the number of inferences per second, per Watt and per dollar. AI’s original link to brain research often looks like a pale memory today. But the effort to ‘reverse engineer’ biological brains remains a fascinating and important area of research, with potential applications in artificial intelligence. Putting neuromorphic technologies to the test in real-world applications – on a large scale, involving large corporations – could also be a way to reinvigorate the mutually beneficial cross-fertilization between artificial intelligence and brain research.
