EDACafe Editorial Roberto Frazzoli

Roberto Frazzoli is a contributing editor to EDACafe. His interests as a technology journalist focus on the semiconductor ecosystem in all its aspects. Roberto started covering electronics in 1987. His weekly contribution to EDACafe started in early 2019.

Special Report: Present and Future of the EDA Oligopoly – Part Two

April 27th, 2023 by Roberto Frazzoli

Will the market dominance of EDA’s “big three” remain immune to the new factors that are impacting the semiconductor ecosystem? We tried to answer this question with the help of Laurie Balch (Pedestal Research), Harry Foster (Siemens EDA), David Kanter (MLCommons), KT Moore (Cadence) and Wally Rhines (Cornami).

In Part One of this special report we focused on the factors of stability that contributed to the creation of the EDA oligopoly (Cadence, Siemens EDA and Synopsys, of course) and still underpin the incumbents’ market dominance: a certain degree of complementarity among the ‘big three’ product offerings, and the high cost customers incur if they want to switch from one EDA vendor to another. We also examined the overall performance of EDA solutions for IC/ASIC design through the findings of the 2022 Wilson Research Group Functional Verification Study, which shows, on average, a 24 percent rate of first silicon success and a 33 percent rate of project completion without schedule slips. Additionally, we discussed the attitude of the EDA industry towards benchmarking and open-source tools.

In this second part of our report we will address some of the criticisms that have been raised against the EDA oligopoly and consider some potential factors of change. We will do that, again, through interviews with EDA professionals (Harry Foster, Chief Scientist Verification at Siemens EDA; KT Moore, VP of corporate marketing at Cadence; Wally Rhines, CEO at Cornami and formerly CEO at Mentor Graphics for twenty-four years), an EDA market analyst (Laurie Balch, Research Director at Pedestal Research) and an executive from a benchmarking consortium (David Kanter, Executive Director at MLCommons).

A different view on the EDA status quo: DARPA and the OpenROAD project

Is the vendor oligopoly a positive or a negative thing for EDA users?
Over the past few years, DARPA (Defense Advanced Research Projects Agency) has often expressed opinions that are relevant to this discussion. In particular, the EDA theme was addressed by Serge Leef when he was Program Manager at DARPA’s Microsystems Technology Office. Other relevant concepts can be found in the OpenROAD project led by Professor Andrew Kahng of UC San Diego, one of the open-source EDA initiatives supported by DARPA. Unfortunately, neither Serge Leef – who left DARPA last year – nor any other DARPA spokesperson, nor Professor Kahng could be reached for comment, so here we will refer to documents that are publicly available online.

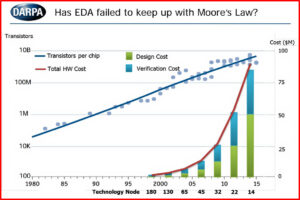

A well-known position expressed by DARPA concerns the rising cost of EDA tools, which the agency criticized in the famous 2018 slide shown above.

Cost is one of the aspects on which the positions of DARPA and of the EDA industry diverge. Wally Rhines offers an explanation for the high cost experienced by government EDA users, and rejects the notion of ever-increasing prices for mainstream EDA customers: “The U.S. government has been unable to pool its purchasing power and thus pays higher rates for EDA software than large semiconductor companies. EDA product pricing has consistently been on a learning curve with the software cost per transistor decreasing at the same rate that manufacturing costs per transistor decrease,” he says. On at least one occasion, however, DARPA did acknowledge that the price problem for government EDA users originates from fragmented purchasing. In a video from the 2021 ERI (Electronics Resurgence Initiative) Summit, Serge Leef observed that “the government ecosystem is (…) not organized as a single purchasing or negotiating entity (…) and because the government doesn’t really negotiate as a group we end up with many different complicated expensive deals which essentially creates a barrier for the government ecosystem that prevents it from benefiting from state-of-the-art design technologies.”

Cost alone, though, cannot explain DARPA’s support for open-source EDA initiatives. The other reason lies in the agency’s position on the innovation capability of the EDA status quo.
Again at the 2021 ERI Summit, in a workshop, Leef observed that “EDA startups can’t attract venture financing as they can only grow to about $10M before needing a global sales channel,” and “These forces result in high prices and innovation stagnation and there have been few transformative technical advances since 1988.” Consequently – at least in 2021 – DARPA hoped to both “improve access and fuel advances through open-source EDA technologies.” The programs set up by the agency were therefore tasked with extremely ambitious goals: to create “beyond state-of-the-art design technologies that would deliver no human in the loop 24-hour turnaround for a number of tasks associated with design of complex chips,” as Leef explained in the video mentioned above.

DARPA’s targets are reflected in the OpenROAD project led by UC San Diego. As stated on the project’s website, “OpenROAD tool and flow provide autonomous, no-human-in-the-loop, 24-hour RTL-GDSII capability to support low-overhead design exploration and implementation through tapeout.” And these capabilities are being tested not just on old nodes, but also on relatively advanced processes: OpenROAD recently held a 7-nanometer contest using a RISC-V design and the ASAP7 PDK (a predictive process design kit based on current assumptions for the 7-nm technology node, but not tied to any specific foundry). An update on OpenROAD’s vision was given in a presentation at the recent workshop on Open Source Design Automation hosted by the DATE Conference.

Is the oligopoly slowing down EDA innovation?

Innovation is an aspect on which opinions about the EDA status quo are diametrically opposed. Is EDA innovation stagnating? Or, on the contrary, is it moving fast thanks to the high profitability enjoyed by the ‘big three’? Undoubtedly, the incumbents invest significant resources: according to studies, EDA suppliers typically spend more than 35 percent of revenues on research and development.
And EDA users keep taping out ever bigger and more complex chips, which they could not do if EDA were lagging. “I don’t think [the oligopoly] slows down innovation,” says KT Moore. He recalls that design goals evolved from just performance in the ’80s to PPA (power, performance, area), and beyond. “Over the years, the vectors along which we have to optimize for continue to expand. Within power performance area, there are subcategories like crosstalk, signal integrity, static timing, thermal analysis. (…) Now you cannot really consider the complete design of a chip without thinking about the multiphysics effects, without thinking about thermal, especially when you’re looking at things like advanced packaging, as well as board design and system design.”

In terms of innovation, there is no doubt that all major EDA vendors are leveraging artificial intelligence techniques, which promise transformative advances. According to Foster, AI will also help address the problem of verification productivity: “As we introduce new [verification] technologies, they improve the quality of the verification process (…) but they also add extra work. They’re not necessarily improving productivity, they’re improving quality. I’m optimistic in a lot of the research that’s going on now in terms of introducing AI and ML techniques to essentially help us with a lot of these processes to improve productivity in addition to quality. (…) I don’t believe we’re going to solve this problem purely by speeding up a simulator. We always are constantly speeding up simulation, but that will not cut it. Emulation has become incredibly important, particularly on SoC class designs. But again, to get that next level does require some change in the way that we think about how to do this thing. And so I’m optimistic about a lot of the stuff in ML and AI.” Further innovation efforts can be expected from the EDA incumbents to address the problems we discussed in Part One: schedule slips and silicon re-spins.
The agenda may include moving to higher levels of abstraction. “We need to rethink the way that we do design, to prevent bugs,” says Foster. “For example, wherever we can move to a higher level language, that will enable us to achieve some of these goals. And the reason for that is that historically, we find between fifteen and fifty bugs per one thousand lines of code. (…) If I can describe the same design in a hundred lines of code, I’m still going to have that proportion of fifteen to fifty bugs per one thousand lines of code, but it’s fewer lines of code, which means I’m introducing fewer bugs in the design.”

Beyond the incumbents’ own innovation capabilities, both Rhines and Foster stress that the EDA oligopoly has never prevented the birth of many innovative EDA startups. “Any lack of innovation in EDA has been offset by the fertile environment for startups that require very little venture capital to become viable,” says Rhines. Foster elaborates on the same point: “I don’t think [the oligopoly] has slowed down the innovation, if you look at startups that have occurred over the past twenty years. Let’s face it, a lot of the technologies that are commonplace today started out as startups. (…) So I think that aspect is pretty healthy. (…) I’m dealing with a lot of startups right now that are doing some pretty innovative stuff.” Foster also points out that this year’s Design Automation Conference has received the largest number of submissions ever. “So I know the research community is incredibly healthy in terms of design automation research,” he adds. Getting back to the initial question on innovation capabilities, then, one could probably say that the EDA ecosystem as a whole (incumbents plus startups) is performing well. This, however, is a peculiar situation, as the oligopoly – despite its dominance and profitability – is ‘outsourcing’ part of the innovation burden to other players.
These peculiarities of the EDA industry are attracting the attention of academic researchers, and are beyond the scope of this article.

Usefulness of benchmarking: the MLCommons standpoint

The EDA professionals and the market analyst we talked to in Part One unanimously believe that benchmarks would be of little use in EDA, because of the wide diversity of designs and goals. They also believe that publicly recognized, third-party benchmarks would be difficult to create in EDA, due to the lack of publicly available datasets. Are these hurdles fundamentally different from the ones found in industries that do have benchmarks and use them on a regular basis? To answer this question, we talked to David Kanter, Executive Director at MLCommons, the engineering consortium behind the MLPerf machine learning benchmarks. While any direct comparison between EDA and machine learning acceleration would be inappropriate, it’s interesting to examine the reasons that drove the ML community – within a few years – to develop and embrace a wide range of benchmarks managed by a neutral organization. As Kanter recalls, MLPerf benchmarks were born out of a shared need within the ML community: “Before MLPerf, people would be making comparisons that were totally ridiculous. There was a very clear need for benchmarks,” Kanter says. “MLPerf started by bringing together some vendors and some academics in a room. (…) The first meeting was six or seven people; we had all worked on this problem, we think that it’s an important problem, we all see a need for benchmarks. Everyone was bought into that vision.
After that, we put out a call to the broader ML community, and the next meeting after that wasn’t six people, it was like sixty people.” And the group included representatives from all categories of stakeholders: “Google is both an ML purchaser and a vendor, they do their own TPUs; Nervana Systems, a startup, was a vendor; Baidu Research in Silicon Valley was involved from the very start, they are consumers; the academics were folks who are both on the consumer and on the designer side.”

MLCommons, too, was confronted with the diversity of users’ goals: “When you are making a benchmark,” says Kanter, “there is always this intrinsic tension between being general – trying to apply to many people and make a useful tool – and being as accurate as possible. You always have to sacrifice some level of accuracy to get that generality.” However, benchmarks can be designed to be as representative as possible of real-life applications: “We pick benchmarks that we know are commercially relevant. We picked recommendation as we know it’s an important workload; we picked NLP using BERT because we know it’s an important workload. We talked to customers about it,” Kanter points out. If benchmarks necessarily trade some accuracy for generality, how useful are they? “I think benchmarks have a huge amount of value in a number of different ways,” Kanter maintains. “If you are a large company you can probably have a dozen engineers just trying out EDA tools to figure out which one is best. Benchmarks will still be very helpful for folks like those. For example, Meta is a member of MLCommons; they have a whole team of people who evaluate ML solutions, but they still use MLPerf as a screening pass. But if you are a smaller company, I actually think benchmarks become more informative because as an engineer you may not have a lot of time to make these decisions, and some data that is carefully constructed to be representative may just be better than no data.
(…) There are tradeoffs with benchmarks, but some data is better than no data.”

Kanter confirms that publicly available datasets are a key requirement for the creation of benchmarks, but he thinks that it would be possible to get around this obstacle: “At MLCommons we can only build benchmarks where we have datasets. And they need to be datasets that are available to everyone. So in EDA you can’t take a proprietary CPU and just run the benchmark, because just one company would have access to that. Even something like a standard IP core, that anyone can license, it’s not actually open and available. But there are plenty of open-source designs, to my understanding. Certainly to my knowledge there are many open RISC-V designs you can take off the shelf, and I don’t see why you couldn’t make a benchmark with that,” he observes.

However, the increasing use of ML techniques within EDA might change the EDA industry’s attitude towards benchmarking and towards the sharing of datasets. Recalling a discussion held at a recent National Science Foundation workshop, Foster observes a growing awareness of new needs: “What came out of this workshop that I felt was valuable, was that to get the most out of ML and AI [in EDA] we need to essentially come to some agreement on infrastructure,” he says. This need for a shared infrastructure is felt when evaluating the results obtained by different organizations using ML in EDA: “No one can reproduce those results because there’s no concept of the data that we use to train that can be leveraged. They don’t know if their algorithm’s better or not,” says Foster. “That’s part of that infrastructure we all felt that we need to solve in the future to improve our overall verification. (…) Part of the problem in starting to introduce these ML solutions is the lack of data that is available that has been contributed to by industry.
Let’s say, some big processor company or maybe some EDA company where the data has been contributed, where we can actually start benchmarking across multiple algorithms. That has not existed in the EDA world at all. It has existed in other domains.” One organization that has started addressing these problems is Si2, with its Artificial Intelligence/Machine Learning Special Interest Group.

The China factor

Another new factor that could potentially impact the EDA industry’s status quo is the geopolitical tension between the US and China. On the one hand, China is investing heavily in EDA startups – to gain independence from the US – and in theory some Chinese EDA players could become serious challengers on the global market, threatening the dominance of the ‘big three’. On the other hand, geopolitical tensions could turn into an additional factor of stability for the oligopoly, since the US government is using EDA as a chokepoint to curb China’s semiconductor ambitions: we are referring to the export restrictions on the EDA tools needed to design GAA transistors. Clearly, for the chokepoint to be effective, the oligopoly must continue to exist. Additionally, the new protectionist wave could discourage investment in open-source EDA, since such tools could also benefit China.

The first scenario (China developing a world-class EDA challenger in the short term) is generally considered unlikely. According to Rhines, “While the emergence of EDA companies in China will have some impact, the bigger impact is probably the increase in percentage of China usage of EDA software through piracy rather than purchase.” A 2021 joint study from two ‘think tanks’ (Germany’s Stiftung Neue Verantwortung and Mercator Institute for China Studies) came to similar conclusions: “Even (…) with abundant funding, Chinese EDA firms are unlikely to be able to provide solutions for chips at the global cutting edge.
There is also a risk that lavish state-led funding and guaranteed procurement will lead Chinese EDA firms to plateau comfortably at capabilities that trail the global technical frontier, a result with many precedents in different ICT sectors in China. Another critical factor would be availability of the Big Three’s tools despite expanded U.S. export controls. This availability might remain extensive, for several reasons. As EDA tools are software, they can be hacked; Synopsys has already sued one Chinese business for such behavior. Alternatively, the Big Three might ignore piracy, because even illegal use of their tools in China works against the rise of Chinese competitors.”

Given the rationale behind the export restrictions on EDA tools, one might assume that piracy would allow China to catch up on GAA transistors in a short time. However, according to Rhines, the real barrier on this road is manufacturing equipment: “Chinese companies are slowly developing viable EDA design tools although probably not for 7nm design and below. That will continue. Export restrictions on GAA affect the libraries and models associated with specific GAA process rather than the overall toolset that is used. If foundries with GAA capability emerge in China, they can probably provide these libraries. GAA capability in China is doubtful in the near future because of manufacturing equipment limitations rather than the limitations of available EDA tools,” he says. In more general terms, KT Moore underlines the size of the gap that separates the EDA incumbents from any new challengers: “In general, for anyone to catch up, it’s going to take a lot of work. (…) If you look at the number of people that Cadence employs, or the number of people that our competitors employ, it’s in the tens of thousands. And it’s not just the sheer numbers that matter, it’s also the time investment that those numbers represent.
We’re talking years of learning across multiple manufacturing processes, multiple design methodologies, multiple products in these vertical markets that we support. So I would say that is a lofty goal to come in as a new entrant into this market. And I think it’s very aggressive to think that any catch-up can be made in short order.” Foster has a similar opinion: “I think a lot of people underestimate the amount of work and research to go into all these EDA solutions, it takes years and years. So I just don’t see that happening overnight.”

Another debatable point is the role of open-source EDA in the context of US-China tensions. Another paper from the above-mentioned ‘Stiftung Neue Verantwortung’ think tank recommends strengthening incentives for the development and adoption of open-source EDA tools as a way to democratize chip design “at the trailing-edge and mature nodes – of high relevance for industrial, automotive, and health applications.” Apart from the fact that mature nodes do not necessarily equate to relaxed requirements (automotive chips, for example, have very stringent safety requirements), the assumption that open-source EDA only targets mature nodes is debatable. As we saw, the OpenROAD project is already targeting the 7-nanometer node and is aiming at “beyond state-of-the-art” design technologies. With China extensively leveraging the open-source RISC-V ISA, one could expect some debate on open-source EDA initiatives.

More factors: foundries, hyperscalers, cloud-based EDA

Lastly, let’s quickly review three additional factors that could potentially impact the EDA oligopoly in the future. The first is the role of foundries in an era that some analysts are describing as de-globalization or even a “war for technology”. The leading Asian foundries rely on U.S. EDA tools to enable their customers to take advantage of their advanced processes. Could foundries start playing an active role in EDA? “It’s doubtful,” says Rhines.
“Previous attempts (think LSI Logic or VLSI Technology in the 1980s) have largely failed. EDA requires independence from foundries so that customers can choose the foundry that is best suited to their product requirements. TSMC has wisely focused upon being a foundry and working with a variety of EDA companies. I don’t think that paradigm will change.”

The rise of hyperscalers, a disruptive change for many industries, has so far actually reinforced the EDA oligopoly in terms of revenues. As Rhines observes, “The growth of the EDA market in the last five years has come primarily from the entry of systems companies into IC design, e.g. Google, Facebook, Amazon, etc. None of the factors you mention [events and trends affecting the semiconductor ecosystem, editor’s note] are significantly impacting the attractiveness of information technology companies doing custom IC designs.”

Some changes, however, can be expected from the third factor: the growth of cloud-based EDA. This way of delivering EDA functions seems to have the potential to weaken one of the factors of oligopoly stability: the barriers that make it difficult for users to switch from one vendor to another. “I think the fact that tools are beginning to move to the cloud more changes the calculus a little bit,” says Balch. “Depending on your particular setup, it may not be your internal CAD team that you’re relying on to establish those integrations and build those new interactions between tools. There may be third parties involved. Some of that integration may be provided by your EDA suppliers. (…) I don’t think we’ve seen, at this point in time, that [the cloud] has made a significant impact on tool upgrade decisions, but it is potentially one element that can change how difficult it is for people to upgrade tools.”

Conclusion

We started Part One of this special report by quoting a 2019 article by Wally Rhines about the EDA oligopoly.
Answering our questions, Rhines essentially confirms that analysis and does not expect any major changes: “There have been three big EDA companies whose combined share was more than 50% of the market through most of EDA history, e.g. Daisy/Mentor/Valid, Cadence/Mentor/Synopsys and almost this much dominance with Computervision/Calma/Applicon. This seems to be a comfortable number for most customers,” he says. Laurie Balch expresses a similar opinion: “The EDA industry has been dominated by those three companies [Cadence, Mentor, Synopsys] for twenty years,” she says. “There were some more meaningful size players in the market [in the past], but through consolidation and changes in the industry, it has been pretty stable at the top for a long time. I don’t anticipate that that is going to significantly change in the immediate future. I think that that will remain pretty stable for quite a few years to come. There’s no particular disruption on the horizon that would indicate some major shift at the top of market.”

Overall, expectations of short-term stability also emerge from the different aspects we briefly examined with the help of the other experts we talked to in this special report. This certainly makes sense. However, the world has changed significantly over the past few years, and some of these changes have already started impacting EDA. In particular, to some extent EDA is becoming an AI-based industry, and hyperscalers – probably some of the most powerful companies in the world – have started designing chips. In the longer term, this could lead to new scenarios. On the one hand, while EDA vendors are brilliantly taking advantage of AI techniques, they do not lead the artificial intelligence world. On the other hand, hyperscalers are a new type of EDA user: they are vertically integrated, and tend to disrupt other industries.
In the longer term, the overall EDA landscape could also change through unexpected acquisitions fueled by the power of AI: think, for example, of Nvidia’s attempt to buy Arm in a different industry. But, of course, all this is pure speculation.