EDACafe Editorial Roberto Frazzoli

Roberto Frazzoli is a contributing editor to EDACafe. His interests as a technology journalist focus on the semiconductor ecosystem in all its aspects. Roberto started covering electronics in 1987. His weekly contribution to EDACafe started in early 2019.

Special Report: Present and Future of the EDA Oligopoly – Part One

April 25th, 2023 by Roberto Frazzoli

Will the market dominance of EDA’s “big three” remain immune from the new factors that are impacting the semiconductor ecosystem? We tried to answer this question with the help of Laurie Balch (Pedestal Research), Harry Foster (Siemens EDA), David Kanter (MLCommons), KT Moore (Cadence) and Wally Rhines (Cornami).

The global EDA market is notoriously dominated by just three U.S.-based major vendors: Cadence, Siemens EDA and Synopsys, of course. This status quo has been stable for decades; will it continue to be this stable in the future? Several new events and trends have recently started impacting the semiconductor ecosystem: US-China tensions, the AI boom, the growth of hyperscalers, massive subsidies supporting the construction of new fabs around the world, etc. Will any of these new events have an impact on the EDA oligopoly?

We tried to answer this question by interviewing EDA professionals (Harry Foster, Chief Scientist Verification at Siemens EDA; KT Moore, VP of corporate marketing at Cadence; Wally Rhines, CEO at Cornami and formerly CEO at Mentor Graphics for twenty-four years), an EDA market analyst (Laurie Balch, Research Director at Pedestal Research) and an executive from a benchmarking consortium (David Kanter, Executive Director at MLCommons, whose contribution will be featured in the next part of this report).

In this first part, we will delve deeper into the factors of stability that have contributed to the creation of the oligopoly and still underpin the incumbents’ market dominance. Adding to the description of the status quo, we will also focus on the overall performance of EDA solutions, as well as on the attitude of the EDA industry towards benchmarking and open-source tools. The second part of our report will address some of the criticisms that have been raised against the EDA oligopoly and will consider some potential factors of change.

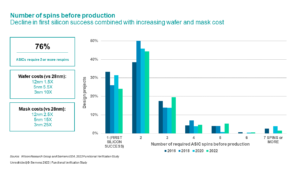

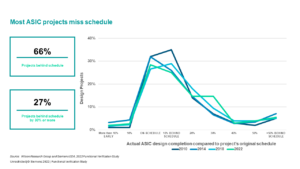

A good starting point is a 2019 article by Wally Rhines – part of which we will briefly summarize here, as far as factors of stability are concerned. Rhines observed that Mentor, Cadence and Synopsys have been an oligopoly since the late 1980s. One factor of stability stems from the fact that, within any one EDA product category, there is a dominant vendor which becomes a de facto supplier and therefore can spend more on R&D and support than its competitors. Another factor of stability is the very high switching costs for users to move between EDA suppliers, given the infrastructure of connected utilities and the familiarization required to adopt a specific vendor’s software.

As for the first of these two factors, it should be noted – as Daniel Nenni explained in this article – that the reliability of golden signoff tools rests on reputations and experience built up over decades of use and collaboration with foundries. To complete this brief premise, let’s recall that foundries share their notoriously proprietary PDKs only with a small number of selected EDA vendors.

The analyses above are undoubtedly accurate and compelling. Still, some questions remain unanswered if we consider EDA in the context of the semiconductor ecosystem, which is notoriously ‘unstable’ – so to speak – first of all in terms of its fast technological advancements, and also in terms of market cycles. Why are the factors of stability underpinning the EDA oligopoly so strong and durable as to protect the incumbents’ ‘sweet spot’ for over three decades?

Competition versus complementarity within the oligopoly

One of the aspects that are worth a deeper analysis is the fact that the product offerings of the “big three” are, to some extent, complementary. Laurie Balch provides a detailed description of this aspect: “When you start to dive deeper into the EDA landscape,” she says, “you start to see that most of the vendors are not providing tools in every area.
So it would actually be difficult to have an end-to-end solution provided by just a single EDA supplier. (…) Each of the EDA vendors have different areas in which they specialize, different areas in which they are best-in-class, where they really dominate, and it really requires a deeper look into all of the subspecialty product areas within EDA. This is a very fragmented market in that sense, because EDA encompasses several dozen different types of design tools, and they are all very unique from one another. Synopsys doesn’t touch PCB design at all, so that is one very obvious distinction among the three vendors, but Synopsys has tools now focused on software design that Cadence and Siemens do not.”

This is where another factor comes into play: the different customer requirements, leading to different tradeoffs between tool performance and tool integration effort. “Ultimately, every user’s evaluation is different,” Balch observes, “and to the extent that a user can maximize a single supplier’s tool suite and use tools that are provided by just a single EDA vendor, there’s less integration that they need to do. So there is a benefit there when they can utilize a single provider’s tools. But again, depending on their exact design requirements, that may not be giving them exactly what they need. Unlike a lot of other types of enterprise tools that organizations purchase, EDA is an area where the tool suites and the individual point tools that you purchase are really going to be evaluated on a very individualized scenario basis.”

Customer diversity also explains why, over the years, each major vendor has tried to expand its coverage of the design/verification flow as much as possible, instead of focusing its resources on just the tools where it is best-in-class.
“I think any EDA vendor you ask will say that they would love to become number one in every category,” says Balch, “but I think what it boils down to is that they have staked out different positions in catering to users with particular sets of requirements. And so, in any category you might look at, Company A’s tool is more desirable for those creating a certain type of semiconductor product or electronics product, and Company B’s version of the tool is optimized for a different set of needs. So I think that they, in many ways, are complementary to one another in the market, and no one tool can be optimal for everyone. It behooves the user base to have different options, because their needs today may not be their needs five years from now when they’re designing a different set of products with different types of design requirements. And having providers that specialize in or are optimized for different types of design work makes sure that everyone has something available to them that is suited for their own design requirements.”

The costs of switching from one EDA vendor to another

Disruptive innovations, bold visions, a risk-taking attitude: these are usually considered some of Silicon Valley’s founding values. So it’s kind of ironic that EDA, a key enabling technology for the whole semiconductor industry, enjoys a stability that – besides other factors – rests upon users’ reluctance to switch from one EDA vendor to another. This is why switching barriers deserve a deeper analysis.

“Most users are using a medley of tools from various providers,” Balch points out. “Particularly at the upper tiers of the market, among the very high-power users, they are not reliant on just a single vendor for the entire tool suite. They are picking best-of-class tools in all the different categories of tools that they use and then integrating them into a tool flow. And so I think that is one of the major reasons. It’s not just that engineers are reluctant to change.
Yes, that’s certainly true. Once engineers are familiar with how to use a certain set of tools, they would prefer to stick with that. But it’s much more than that. It’s very much also tied to the fact that once you’ve linked together all of your tools into your unified tool methodology, your unified tool flow, breaking all of those connections and having to rebuild those integrations is time consuming and involves significant effort, and has impacts on your ability to reuse designs from the past. If you would like to be able to make use of your prior work and capitalize on the investment you’ve already made in designing prior chips or boards, being able to continue to use the tools on which they were built is helpful to you. (…) So all of those things contribute to a reluctance to switch tools, unless it’s absolutely necessary. And generally, it becomes absolutely necessary for the power users first, because they have the most demanding needs for their design capabilities.”

Answering our questions, Rhines underlines the engineers’ role: “Once a tool is well accepted it’s difficult for engineers to adopt a new one, or for companies to implement an alternative design flow,” he says. “I’ve found that engineers generally believe that all EDA tools have their idiosyncrasies and that it’s easier to deal with the one I know than to learn the issues with a new one.”

Given the existence of these high barriers, change is infrequent and mostly limited to specific cases. “Change does, however, occur”, Rhines observes. “If an existing tool flow is simply unable to handle the complexity of a new design, the design engineers are forced to consider an alternative. Sometimes, a company tries to force change top-down because of a unique deal they’ve made with an EDA vendor. I’ve found that these changes rarely work and they create a lot of discontent (and productivity loss) with the design engineers.
For voluntary design tool changes that are not forced by total failure of an existing flow, the most common cases seem to be changes in tools that are easier to learn and easier to compare. Place and route comes to mind. Selection of the place and route tool sometimes changes when going to a more advanced, or different, technology node; meaningful comparisons can be done on trial layouts in this case.”

Customer satisfaction, schedule slips, silicon re-spins

Provocatively, one could say that “high switching barriers” is just another name for “vendor lock-in”, a situation usually considered undesirable for a customer. In theory, EDA users might be asking for lower switching barriers through user-friendly tools and more effective tool integration standards. Unless the performance that users get from the status quo is so satisfactory that they are happy to give up a bit of their freedom. Is this the case?

This is another question that deserves some investigation, given that the EDA solutions for IC/ASIC design can only ensure – on average – a 24 percent rate of first silicon success and only a 33 percent rate of project completion without schedule slips. These figures, in fact, are some of the key findings from the 2022 Wilson Research Group Functional Verification Study, a user survey commissioned by Siemens EDA. Besides the above-mentioned metrics, the study – architected by Harry Foster – provides a lot of interesting and detailed information that can be accessed from Foster’s blog posts on the Siemens EDA website.

Answering our questions, Foster points out that over the years the EDA industry has been able to keep pace with Moore’s law: “We’ve been incredibly successful in terms of productivity, in terms of design. And the way I quantify that, if you look from 2007 to 2022, we went through multiple iterations of Moore’s law, you could argue five or six iterations of Moore’s law during that period.
But we only increased design engineers by 50%.” However, things look different when it comes to verification: “During the same period, we increased verification engineers by 145%. And so, what’s going on is that design essentially historically has grown at a Moore’s law rate, verification grows at a double exponential rate,” he adds.

An interesting point when it comes to assessing the overall performance of the EDA solutions is the comparison of different editions of the Wilson Research Group study. “I think observing trends is pretty important,” says Foster. “For example, the trend in terms of this decline for silicon success. Historically, going back to 2020, it’s been approximately 30% of projects that were able to achieve first silicon success. But just recently, since 2016, we are starting to see a decline, and in fact in the 2022 study this was the lowest we’ve ever seen, 24%.”

According to Rhines, however, a deeper look at the above-mentioned study would reveal positive performance results: “Buried in the detail of the Wilson Research report is the fact that teams who use emulation achieve more than a 70% first pass success rate. Those that use both emulation and FPGA prototyping do much better although WRG doesn’t quantify it. In these cases, if there is a re-spin, it’s for things like yield improvement or parametric adjustments. The EDA industry needs to continue its progress improving PPA, especially with the newer AI-driven tools.”

Balch recognizes that re-spins are a serious problem but is optimistic: “This is not a new problem at all. Having to do design re-spins has been a major contentious issue in the industry for decades. And the issue is that the cost of doing design re-spins keeps getting higher”, Balch says, referring not just to the need to produce new masks but also to the risk of missing a market window for a new chip. However, “I don’t think that design engineers are any more unhappy now,” she adds.
“I don’t think that there’s a noticeable difference in them being especially unhappy now. I think that tools continue to be introduced that help with improving design cycle times, and improve engineers’ ability to design correctly the first time, to remove issues through the design process the first time around, but perfection is hard to achieve in any area. (…) There are many types of new EDA tools and methodologies that can help address this, and adoption of those is critical to reducing design re-spins. But adoption can often be slow going. (…) There are relatively few users who are using everything available to them, because there is a learning curve involved, in addition to the cost of having to adopt new design tools and train on new design tools. There’s a lot of upfront overhead there to switch the way you do things. But we do see that these new methodologies are gaining traction in the market and are being adopted successfully and are seeing results in improving the way design happens. So I have hope. I have very optimistic views as to how all of these technologies will improve the ability of design engineers to design successfully from the get-go.”

KT Moore adds a different perspective: “There are many external factors that introduce and cause schedule slips apart from EDA,” he says. “[For example, customers] might change their requirements. This happens a lot. (…) They might have missed something in their market analysis, or there might be a shift in direction and strategy. You might have delays because maybe you weren’t staffed properly, and you’re trying to do too much with too little. And you underestimate the amount of time it takes to go from a hierarchical high-level description through implementation, through manufacturing. So there’s a number of reasons why that happens.
What I feel, our job is to make sure that as those things come up, our customers can pivot quickly and adapt to whatever changes are impacting their schedule.”

The lack of third-party EDA benchmarks

A description of the EDA status quo should also include the non-existence of third-party, public EDA benchmarks, recognized and supported not just by academic researchers developing open-source EDA tools but also by the ‘big three’ vendors. Rhines, Foster, Balch and KT Moore agree in saying that benchmarks would be of little use in EDA, and they would also be difficult to create.

“Benchmark comparisons are always interesting but difficult to use in EDA because of the differences by which engineers approach design problems and interface with applications,” says Rhines. “Even simple performance benchmarks tend to be difficult to use because there are so many conditions that may be different for different types of designs. User reviews seem to be the most useful for those who are evaluating new design tool alternatives.”

Foster expresses a similar opinion about using, for example, the implementation of a RISC-V design as a benchmark: “There’s no guarantee that that would necessarily be optimal for my particular design. (…) Customers do have to try their own design, their own flow, and figure out which tools work best for their designs.”

Balch also agrees: “Universal benchmarks are very, very difficult in EDA because the particular design challenges that any one user has are different from other users. Everyone is doing something unique. And while there may be similarities, it’s very challenging to make apples to apples comparisons between different users’ experiences because what they’re employing the tools to be able to accomplish is so diverse.
So I don’t think it’s quite so easy to have standardized benchmarks for EDA tools as it may be for other industries that have a more universal way of utilizing tools.”

Similar concepts are reiterated by KT Moore: “Benchmarking gives you less information than an evaluation. (…) From a customer perspective [benchmarks are] fine, and that’s a good, entry level kind of thing to check the box. But what customers really care about is: how is it going to work for me? So that’s, to me, the difference between a benchmark and an evaluation. Customers generally evaluate based on their reference point, how they are going to see a performance improvement. That, I think, has been pretty consistent over my experience in the last twenty years or more. Customers will want to compare [the tool] to some data reference that they trust, that they’re familiar with.”

One additional reason cited by EDA professionals to explain the non-existence of widely recognized EDA benchmarks is the lack of publicly available datasets. This aspect is underlined by Foster: “Each EDA vendor has their own internal benchmarks based on their customers’ designs, and they tune and optimize their EDA tools to those designs. Unfortunately, those designs can’t be shared. So we lack data to come up with a common set in terms of benchmark.”

Marginal role of open-source EDA tools

Another aspect of the EDA status quo is the marginal role played by open-source EDA tools. While there is no lack of brilliant examples of open-source EDA initiatives, tools and applications, as of today none of them has managed to become a success story (Linux and RISC-V obviously come to mind, even though the comparison is inappropriate). To some extent, this can be easily explained by one of the above-mentioned factors of oligopoly stability: EDA users’ reluctance to switch from a trusted tool to a less trusted or less familiar one. The EDA incumbents, however, offer additional explanations.
Rhines underlines the difficulties of the open-source approach in EDA: “A popular expression in the software industry is, ‘when free is too expensive’. Open-source tools require support. Support costs money. Developing the EDA tools for the latest technology requires an ecosystem that is willing to develop freeware without compensation. That’s very difficult in the EDA industry.”

KT Moore focuses on the IP protection aspect: “There’s a lot of proprietary information in customers’ designs, some of which they own, and some of which they don’t. (…) Is [open-source EDA] going to be sustainable to manage the intellectual property of either the customer or third parties? And is open-source EDA going to be strong enough to deliver solutions that manage the complexities that our customers are seeing today? When I think of open-source, I think of something that’s a starting point, not a finishing point. And if I adopt open-source EDA for some sort of application, it’s going to require some additional tooling and engineering to make it fit in what I’m trying to get accomplished.”

This concludes Part One of our special report on the present and future of the EDA oligopoly. Not surprisingly, most of the opinions we have heard so far describe the status quo in a positive way; in Part Two, however, we will address some criticisms and some potential factors of change – again, with the help of Laurie Balch, Harry Foster, David Kanter, KT Moore and Wally Rhines. Topics discussed will include opinions expressed by DARPA in the recent past, the innovation rate ensured by the oligopoly, the ambitious goals of some open-source EDA projects, the role of benchmarks in other hi-tech industries, EDA export restrictions to China, and more.
