EDACafe Editorial Roberto Frazzoli

Roberto Frazzoli is a contributing editor to EDACafe. His interests as a technology journalist focus on the semiconductor ecosystem in all its aspects. Roberto started covering electronics in 1987. His weekly contribution to EDACafe started in early 2019.

Camera-based driving; new AI chips; NeurIPS; GaAs-on-Si; integrated capacitors

January 17th, 2020 by Roberto Frazzoli

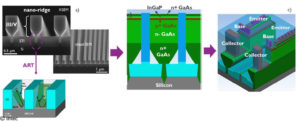

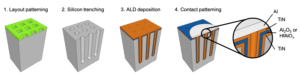

Last week and in late December, we focused on the unified programming platforms recently introduced by Xilinx and Intel, respectively. This week we try to catch up on some of the major news from the last thirty days or so. Let’s start by briefly mentioning that Facebook is reportedly planning to develop its own operating system. And now, more updates from industry and research.

No driver – and no lidar, too

CES was, of course, the biggest tech event at the start of the new year, and autonomous driving was obviously a major theme in Las Vegas. One of the hurdles delaying this technology is the number and cost of the sensors it requires, such as lidars. But that could change soon: at CES 2020, Mobileye President and CEO Prof. Amnon Shashua discussed ‘Vidar’, Mobileye’s solution for achieving lidar-like outputs using only camera sensors. He showed a 23-minute video of an autonomous vehicle navigating a complex environment with camera-only sensing. Also focused on enhancing camera performance for autonomous driving is the collaboration between ON Semiconductor and Pony.ai, the first company to roll out daily robotaxi operations in both China and California.

Tons of new AI silicon

New chip announcements abound in the AI area, as usual; here’s a very brief summary in random order. Amazon Web Services (AWS) now offers its customers access to cloud inference running on its internally developed Inferentia chip, reportedly achieving lower latency, higher inference throughput, and lower cost per inference than Nvidia GPUs. Baidu and Samsung have announced that Baidu’s first cloud-to-edge AI accelerator, Kunlun, has completed development and will be mass-produced early this year. Nvidia has introduced Drive AGX Orin, a software-defined platform for autonomous vehicles and robots, powered by a new 17-billion-transistor SoC called Orin that delivers nearly 7x the performance of the previous-generation Xavier SoC.
Syntiant, a start-up specializing in neural processing-based edge voice solutions for battery-powered devices, has announced that its NDP100 and NDP101 ‘Neural Decision Processors’ are now shipping to customers worldwide. The chips consume less than 140 microwatts. Texas Instruments has introduced the new Jacinto 7 automotive processor platform, with the goal of enabling “mass-market adoption of automotive ADAS and gateway technology.” The first two devices in the platform include specialized on-chip deep learning accelerators.

Groq’s giant processor

This is a great time for new processing architectures. More details are now available about the AI acceleration chip developed by startup Groq. As microprocessor expert Linley Gwennap explains, “Groq has taken an entirely new architectural approach to accelerating neural networks. Instead of creating a small programmable core and replicating it dozens or hundreds of times, the startup designed a single enormous processor that has hundreds of function units. This approach greatly reduces instruction-decoding overhead (…) The result is performance of up to 1,000 trillion operations per second (TOPS), four times faster than Nvidia’s best GPU.” Gwennap also observed that “One challenge with creating a physically large CPU is that clock skew makes it difficult to synchronize operations. Groq instead allows instructions to ripple across the processor, executing at different times in different units.”

Picturing a face from a voice sample

Among the last events of 2019, NeurIPS, the Conference on Neural Information Processing Systems (December 8-14, Vancouver, Canada), offered many interesting papers describing advances in neural network research on many fronts: biological plausibility, cost reduction, energy reduction, and more.
These include deep learning algorithms that do without ‘weight transport’, a mechanism that is impossible in biological brains; a biologically inspired developmental algorithm that can ‘grow’ a functional, layered neural network from a single initial cell; a method for discovering neural wirings, in which the wiring of the network is not fixed during training; a paper demonstrating that it is possible to automatically design CNNs that generalize well while being small enough to fit onto memory-limited MCUs (2KB of RAM); CNN training strategies achieving energy savings of up to 90% with minimal accuracy loss; and an attempt to guess what a person’s face looks like from a sample of his or her voice.

Mm-waves calling for higher integration

Home of Stella Artois beer, the Belgian city of Leuven keeps brewing innovation through its Imec research institute. In wireless communication, the shift toward mm-wave bands will require increasingly complex front-end modules that combine high speed, high output power and small size. To tackle these challenges, Imec is exploring CMOS-compatible III-V-on-Si technology, looking into the co-integration of front-end components (such as power amplifiers and switches) with other CMOS-based circuits. Among the early results, Imec has demonstrated the first functional GaAs-based heterojunction bipolar transistor (HBT) devices on 300mm Si, as well as CMOS-compatible GaN-based devices on 200mm Si.

Higher on-chip capacitance

Finland-based Picosun has developed a solution for creating silicon-integrated microcapacitors with an areal capacitance of up to 1 microfarad per square millimeter.
The solution exploits the room available on the bottom side of silicon wafers. It combines an electrochemical micromachining technology developed at the University of Pisa (Italy), which can etch high-density trenches with aspect ratios of up to 100 in silicon (a value otherwise not achievable with deep reactive ion etching), with Picosun’s ALD (Atomic Layer Deposition) thin-film coating technology.

Acquisitions

On December 16, Intel announced that it had acquired Habana Labs, an Israel-based developer of deep learning accelerators for the data center, for approximately $2 billion. Synopsys has recently completed the acquisition of Tinfoil Security (Mountain View, CA), a provider of dynamic application security testing (DAST) and application programming interface (API) security testing solutions. Synopsys has also agreed to acquire certain IP assets of Invecas (Santa Clara, CA), broadening its DesignWare IP portfolio and adding a team of R&D engineers.

Upcoming events

Events taking place over the next few weeks include “The Next FPGA Platform” (January 22, San Jose, CA), a “PowerPoint-free” debate on FPGAs in acceleration applications; DesignCon (January 28-30, Santa Clara, CA); the tinyML Summit, focusing on ultra-low-power machine learning at the edge (February 12-13, San Jose, CA); and ISSCC, the International Solid-State Circuits Conference (February 16-20, San Francisco, CA).
