EDACafe Editorial Roberto Frazzoli

Roberto Frazzoli is a contributing editor to EDACafe. His interests as a technology journalist focus on the semiconductor ecosystem in all its aspects. Roberto started covering electronics in 1987. His weekly contribution to EDACafe started in early 2019.

A more flexible Arm; memristors; record-breaking GPUs; motors into the wheels; and more news from industry and academia

July 18th, 2019 by Roberto Frazzoli

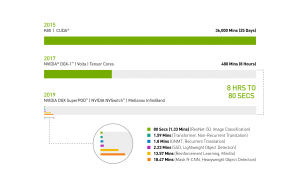

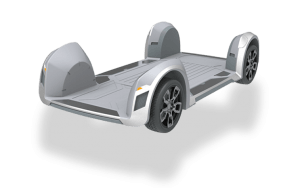

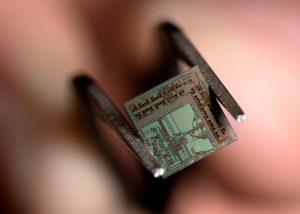

Experimenting with Arm technologies before committing to the manufacturing license fee: this is the new opportunity now offered by Arm. The company has announced that it is expanding the ways existing and new partners can access and license its technology for semiconductor design. Called ‘Arm Flexible Access’, the new engagement model enables SoC design teams to initiate projects before they license IP and to pay only for what they use in production. This gives design teams more freedom to experiment and evaluate different options. As the company explained in a press release, partners typically license individual components from Arm and pay a license fee upfront before they can access the technology. With ‘Arm Flexible Access’, they pay “a modest fee” for immediate access to a broad portfolio of technology, then pay a license fee only when they commit to manufacturing, followed by royalties for each unit shipped. The portfolio available through this new engagement model includes all the essential IP and tools needed for an SoC design: the majority of Arm-based processors in the Arm Cortex-A, -R and -M families, as well as Arm TrustZone and CryptoCell security IP, select Mali GPUs, and system IP, alongside tools and models for SoC design and early software development. Access to Arm’s global support and training services is also included.

Memristor advancements

Researchers at the University of Michigan have built the first programmable memristor processor or, as they describe it in their paper published in Nature Electronics, “a fully integrated reprogrammable memristor–CMOS system for efficient multiply–accumulate operations.” Besides the memristor array itself, the chip integrates all the other elements needed to program and run it. These include a conventional digital processor and communication channels, as well as digital/analog converters that interface the analog memristor array with the rest of the chip.
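The multiply-accumulate principle behind such chips can be illustrated with an idealized crossbar model. The Python sketch below is a generic textbook illustration, not the Michigan team's design: it assumes linear devices, ignores wire resistance, noise and drift, uses normalized units, and models the DAC and ADC as simple uniform quantizers. The function names `quantize` and `crossbar_mac` are hypothetical.

```python
from typing import List, Sequence

# Hypothetical, idealized model of an analog memristor crossbar.
# Assumptions (not taken from the Michigan paper): linear devices, no wire
# resistance, no device noise or drift, normalized units, and uniform
# DAC/ADC quantizers.


def quantize(x: float, bits: int, full_scale: float) -> float:
    """Uniform signed quantizer that clips to [-full_scale, full_scale].

    Used here as a stand-in for both the DAC and the ADC.
    """
    if bits < 2:
        raise ValueError("need at least 2 bits for a signed quantizer")
    levels = 2 ** (bits - 1) - 1  # number of positive quantization steps
    step = full_scale / levels
    clipped = max(-full_scale, min(full_scale, x))
    return round(clipped / step) * step


def crossbar_mac(
    inputs: Sequence[float],
    conductances: Sequence[Sequence[float]],
    dac_bits: int = 8,
    adc_bits: int = 8,
    v_max: float = 1.0,
    i_max: float = 1.0,
) -> List[float]:
    """Return the digitized column currents I[j] = sum_i V[i] * G[i][j].

    Each cell obeys Ohm's law (I = V * G) and each column wire adds up the
    currents of its cells (Kirchhoff's current law), so a whole
    vector-matrix product is computed where the weights are stored.
    Conductances are assumed non-negative, as in real devices; signed
    weights would typically need a pair of devices, which is not modeled.
    """
    rows = len(conductances)
    if rows == 0 or len(inputs) != rows:
        raise ValueError("need a non-empty crossbar with one input per row")
    cols = len(conductances[0])

    # DAC: digital inputs -> analog row voltages.
    volts = [quantize(x, dac_bits, v_max) for x in inputs]

    outputs = []
    for j in range(cols):
        # Analog accumulation along column j.
        current = sum(volts[i] * conductances[i][j] for i in range(rows))
        # ADC: analog column current -> digital value.
        outputs.append(quantize(current, adc_bits, i_max))
    return outputs
```

For example, `crossbar_mac([0.5, 0.25], [[0.2, 0.4], [0.4, 0.8]], dac_bits=16, adc_bits=16)` returns values very close to `[0.2, 0.4]` (that is, 0.5 × 0.2 + 0.25 × 0.4 and 0.5 × 0.4 + 0.25 × 0.8). The same call with `adc_bits=2` returns `[0.0, 0.0]`, which shows how converter resolution bounds the accuracy of the analog result.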
According to the researchers, memristors promise a 10-100x improvement in performance and power over GPUs in machine learning applications, thanks to their in-memory processing capabilities.

Nvidia sets new records for training time

While memristors mature into a viable alternative for edge inference applications, GPU leader Nvidia is not sitting on its hands: the company has just announced new records for training performance on the datacenter side, as certified by the new MLPerf v0.6 benchmark suite. “In just seven months since MLPerf debuted with version 0.5, Nvidia has advanced per-epoch performance on MLPerf v0.6 workloads by as much as 5.1x overall,” said Dave Salvator, Senior Product Marketing Manager in Nvidia’s Tesla group, in a blog post on the company’s website. An epoch, as Salvator explains, “is one complete run of passing the data set through the neural network. It’s important to consider per-epoch performance improvements, since the number of epochs needed to converge networks and required accuracy levels can change with each successive version of the network.” An example of the progress the company has made over the past two years was provided by Paresh Kharya, Director of Product Marketing for Accelerated Computing at Nvidia, in another blog post on the company’s website: “In spring 2017, it took a full workday — eight hours — for an Nvidia DGX-1 system loaded with V100 GPUs to train the image recognition model ResNet-50. Today an Nvidia DGX SuperPOD — using the same V100 GPUs, now interconnected with Mellanox InfiniBand and the latest Nvidia-optimized AI software for distributed AI training — completed the task in just 80 seconds.” That is a 360-fold reduction in training time (28,800 seconds versus 80).

Putting the motor into the wheel

Besides autonomous driving, another major trend has the potential to disrupt the long-established automotive industry food chain: electrification.
Disruption, in this case, could start from the mechanical architecture of the vehicle. Ree, an Israeli startup, is developing a solution in which the motors, steering, suspension, drivetrain, sensing, brakes, and electronics are all integrated into the wheel. This allows Ree to create a flat “skateboard chassis platform”, housing the batteries, that reduces a vehicle’s space and weight and increases efficiency. The idea of integrating the motor into the wheel is not new; a similar concept is used, for example, with hydraulic motors in earthmoving machines and similar equipment. Electric vehicles are already mechanically simpler than their gasoline or diesel counterparts, as they can do without the change-speed gearbox. Integrating electric motors into the wheels takes mechanical simplification to the next level, and the resulting architectural change could affect the entire automotive ecosystem. Ree claims to be supported by world-leading Tier 1 suppliers and automakers, so we can expect interesting developments.

Will wireless replace fiber-optic cables?

At 36 gigabits per second, could wireless transmission one day replace fiber-optic cables? A team from the University of California, Irvine, is suggesting this future scenario; at present, the transceiver chip they designed has reportedly achieved this data rate over a short distance, but research continues. The device, fabricated with the help of TowerJazz and STMicroelectronics, operates in the 100 gigahertz frequency range. To achieve its data rate, it “significantly relaxes digital processing requirements by modulating the digital bits in the analog and radio-frequency domains”.
According to a member of the UCI team, this innovation “eliminates the need for miles of fiber-optic cables in data centers, so data farm operators can do ultra-fast wireless transfer and save considerable money on hardware, cooling and power.”

IBM acquisition of Red Hat

On July 9, IBM completed its $34 billion acquisition of Red Hat. With this move, Big Blue aims to position itself as the leading hybrid cloud provider. “Red Hat is going to stay neutral”, said Arvind Krishna, Senior Vice President of IBM Cloud & Cognitive Software, commenting in a blog post on the future of Red Hat’s commitment to open source technologies such as Linux and Kubernetes. IBM itself pledged its support to the open source community: “There will be an increase in terms of the investment that goes into open source. Red Hat will be able to directly leverage the work that IBMers do in these communities. As a result, a lot more will go back to the upstream community”, Krishna added in the same blog post.