Hardware Emulation Journal Jean-Marie Brunet

Jean-Marie Brunet is the Vice President and General Manager of Hardware Assisted Verification at Siemens EDA. He has served for over 25 years in management roles in marketing, application engineering, product management and product engineering in the EDA industry, and has held IC design and …

Using Hardware Emulation to Verify AI Designs

March 6th, 2019 by Jean-Marie Brunet

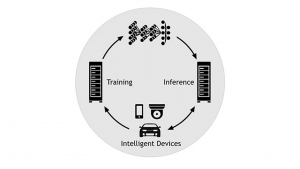

You can’t turn around these days without seeing a reference to AI, even as a consumer. AI, or artificial intelligence, is hot because of new machine-learning (ML) techniques that are evolving daily. It’s often cited as one of the critical markets for electronics purveyors, but it’s not really a market: it’s a technology. And it’s quietly (or not so quietly) moving into many, many markets. Some of those markets involve safety-critical uses, where life and limb can depend on how well it works. AI is incredibly important, but it differs from many other important technologies in how it’s verified.

Three Key Requirements

AI/ML verification brings with it three key needs: determinism, scalability, and virtualization. These aren’t uncommon hardware emulation requirements, but many other technologies require only two of the three. AI is the perfect storm that needs all three. ML involves creating a model during what is called the “training phase”, at least in its supervised form. That model is then deployed in a device or in the cloud for inference, where the trained model is put to use in an application.

Application of AI/ML designs

The training is very sensitive. From a vast set of training examples, you’ll derive a model. Change the order of even one training sample, and you’ll get a different model. That different model may work just fine. That’s one of the characteristics of ML: there are many correct solutions. Each may arrive at the same answer, but the path there will be different. AI training techniques include ways of ensuring that your model isn’t biased towards one training set, but these techniques all rely on a repeatable set of steps and patterns to produce consistent results, because you can’t verify a model that keeps changing.

Likewise, during verification, the test input patterns must remain consistent from run to run. If, for example, you try to feed random internet traffic from a live network into an AI model for a networking application using in-circuit emulation (ICE) techniques, you’re never going to fully converge across design iterations, since you can’t compare results from run to run. This drives the need for determinism.

AI models themselves involve large numbers of small computations, typically performed on a large array of small computing engines. Their data requirements differ from those of many other applications, which changes the way storage is built and accessed. And while the computation for a given model may be done in a cluster, an application may have many such models, resulting in an overall fragmented design. During development, models can grow much larger as they’re optimized and trained on the full range of inputs they might see. This means that, over the course of a project, and particularly when a new project builds on a past one, the verification platform must grow or shrink to accommodate the wide range of resources required over the lifespan of the projects, while minimizing any effect on performance. This drives the need for scalability.

Finally, AI algorithms are new; there is no legacy.
That means that, even if you wanted to use ICE, there are few sources of real data from older design implementations that could be used to validate a new implementation. This is all new stuff. As a result, we must build a virtual verification environment.

Even if we could use ICE, a virtual environment would still be preferable. During debug, for example, you can’t stop an ICE source’s clock. You may stop looking at the data, but the source keeps moving without you. By contrast, a virtualized data source is virtual in all regards, including the clock. So you can stop a design at a critical point, probe around to see what’s happening, and then continue from the exact same spot. This also supports the determinism we already saw we need. And so this drives the need for virtualization.

Veloce Characteristics

These three requirements (determinism, scalability, and virtualization) align perfectly with the three key pillars of Veloce emulators.
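The determinism and virtualization arguments above can be illustrated with a small software analogy. The minimal Python sketch below is not Veloce code and does not model an emulator; `sgd_fit`, `shuffled`, and `VirtualSource` are hypothetical names chosen for illustration, and plain per-sample SGD on a toy linear model stands in for real ML training. It shows two things under those assumptions: fixing the sample order (here with a seeded shuffle) makes training repeatable, while reordering the same samples yields a different model; and a stimulus source whose output depends only on a seed and the emulated cycle count can be stopped, probed, rewound, and resumed without losing its place, unlike a live ICE feed that keeps moving on its own clock.

```python
import random


def sgd_fit(samples, lr=0.1):
    """Hypothetical helper: one epoch of per-sample SGD for y = w*x + b.
    Because each step uses the weights left by the previous one, the
    result depends on the order of the samples."""
    w, b = 0.0, 0.0
    for x, y in samples:
        err = (w * x + b) - y  # prediction error for this sample
        w -= lr * err * x      # gradient step on the weight
        b -= lr * err          # gradient step on the bias
    return w, b


def shuffled(samples, seed):
    """Hypothetical helper: reproducible permutation of the training samples.
    A private, seeded RNG avoids hidden global state."""
    order = list(samples)
    random.Random(seed).shuffle(order)
    return order


class VirtualSource:
    """Hypothetical virtual stimulus source. Each value is a pure function of
    (seed, cycle), so the source advances only when the emulated clock ticks."""

    def __init__(self, seed):
        self.seed = seed
        self.cycle = 0

    def tick(self):
        # Derive this cycle's stimulus from (seed, cycle) only; no wall-clock input.
        value = random.Random(self.seed * 1_000_003 + self.cycle).randrange(256)
        self.cycle += 1
        return value

    def rewind(self, cycle):
        """Jump back to an earlier cycle to replay stimulus from that point."""
        self.cycle = cycle


data = [(1.0, 2.0), (2.0, 3.0), (3.0, 5.0)]

# Same seed -> same order -> same model on every run.
print(sgd_fit(shuffled(data, 42)) == sgd_fit(shuffled(data, 42)))
# Reversing the order of the same samples yields a different model.
print(sgd_fit(data), sgd_fit(list(reversed(data))))

# Run 5 cycles, stop to "probe", then continue from the exact same spot.
src = VirtualSource(seed=7)
trace = [src.tick() for _ in range(5)]
# ... inspect design state here; the stimulus clock is stopped as well ...
trace += [src.tick() for _ in range(5)]
```

The design choice that matters is that `VirtualSource` holds no wall-clock or hidden global state: everything it produces is derived from values the testbench controls, which is what makes results comparable from run to run.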

In Conclusion

Artificial intelligence and ML are forcing us to think in new ways about design and verification. Veloce emulators, already important for many of the markets into which AI is moving, will become an even more important tool for ensuring that your AI-enabled projects get the verification they critically need, in a timeframe that gets you to market on time.

Tags: AI, AI verification, artificial intelligence, ML verification
Category: Mentor