Agnisys Automation Review Anupam Bakshi

Anupam Bakshi is Chief Executive Officer (CEO) for Agnisys, Inc., the pioneer and industry leader in Golden Executable Specification Solutions™. From his early days at Gateway Design Automation, through to his time at Cadence, PictureTel, and Avid Technology, he has been passionate about …

Functional Safety and Security in Embedded Systems

November 17th, 2020 by Anupam Bakshi

Electronics in general, and embedded systems in particular, become more critical every day. There is hardly a single aspect of our lives that is not controlled, monitored, or connected by embedded systems. Even adventurers exploring the most remote regions of our planet carry satellite phones for emergency contact. The ever-increasing role of electronics places heavy demands on the functional safety and security of the chips and systems we design. I’d like to explore these two topics a bit and recommend that you view a webinar that we recorded earlier this year for a deeper dive.

Let me start by differentiating the two terms, especially since “safety” and “security” tend to be used almost interchangeably in everyday speech. Functional safety has a specific meaning when applied to electronics and embedded systems: a measure of how correctly the system behaves in response to a range of failures. One commonly cited example of such a failure is an alpha particle flipping a memory bit. If this occurs in safety-critical logic, the design must include a mechanism to detect the failure and, if possible, correct it. Other sources of failure include human error, environmental stress, broken connections, and aging effects.
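To make “detect the failure and correct it if possible” concrete, here is a minimal Python sketch of a Hamming(7,4) single-error-correcting code, one classic way to protect stored bits against an upset such as an alpha-particle hit. The function names and the 4-bit data width are illustrative choices, not something the webinar or any particular tool prescribes.

```python
"""Minimal Hamming(7,4) single-error-correcting code (illustrative sketch)."""

DATA_POSITIONS = (3, 5, 6, 7)  # 1-indexed codeword positions that carry data
PARITY_POSITIONS = (1, 2, 4)   # powers of two carry the check bits


def syndrome(code: list[int]) -> int:
    """XOR of the 1-indexed positions of all set bits; 0 means no error seen."""
    s = 0
    for pos, bit in enumerate(code, start=1):
        if bit:
            s ^= pos
    return s


def encode(data: list[int]) -> list[int]:
    """Encode 4 data bits into a 7-bit codeword whose syndrome is 0."""
    if len(data) != 4 or any(b not in (0, 1) for b in data):
        raise ValueError("expected exactly four 0/1 bits")
    code = [0] * 7
    for pos, bit in zip(DATA_POSITIONS, data):
        code[pos - 1] = bit
    s = syndrome(code)  # contribution of the data bits alone
    for i, pos in enumerate(PARITY_POSITIONS):
        code[pos - 1] = (s >> i) & 1  # position 2**i cancels bit i of the syndrome
    return code


def decode(code: list[int]) -> tuple[list[int], int]:
    """Return (data bits, flipped position); position 0 means no error found."""
    fixed = list(code)
    s = syndrome(fixed)
    if s:  # a nonzero syndrome names the single flipped position
        fixed[s - 1] ^= 1
    return [fixed[pos - 1] for pos in DATA_POSITIONS], s


if __name__ == "__main__":
    word = encode([1, 0, 1, 1])
    word[4] ^= 1  # simulate an upset (e.g. an alpha-particle hit) at position 5
    data, pos = decode(word)
    print(data, pos)  # [1, 0, 1, 1] 5
```

A plain Hamming(7,4) code cannot tell a double-bit error from a single-bit one and will miscorrect it; memories in safety-critical designs commonly use SEC-DED codes, which add an overall parity bit so that double-bit errors are at least detected.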

Embedded systems security is quite different. At a high level, security means that no private information can leak out and that no malicious agent can get in to control the system. Proper security is critical for system authentication, confidentiality, integrity, non-repudiation, and access control. Although security is often considered a software topic, thanks to well-publicized exploits such as buffer overflows, it is equally important for hardware. This was confirmed dramatically by the 2018 disclosure of the Meltdown and Spectre vulnerabilities in many common microprocessors.

Functional safety and security are clearly important in many applications. Autonomous vehicles are a good example; no one wants a bit flip to cause a car to steer into opposing traffic, or an evil hacker to take control and deliberately cause a crash. Nuclear power plants, military systems, and implanted medical devices are a few other applications where both functional safety and security are life-or-death requirements. Satellites and other space-borne applications face elevated safety risk due to cosmic rays. Security is a major concern for Internet-of-Things (IoT) devices since these tend to be installed by individuals with no training in proper configuration. I could cite many such examples.

In addition to meeting the needs of their target markets, many applications must also satisfy professional standards. Security is a relatively new area for standardization, so there’s not much in place yet. However, there are several widely adopted standards for functional safety, including:

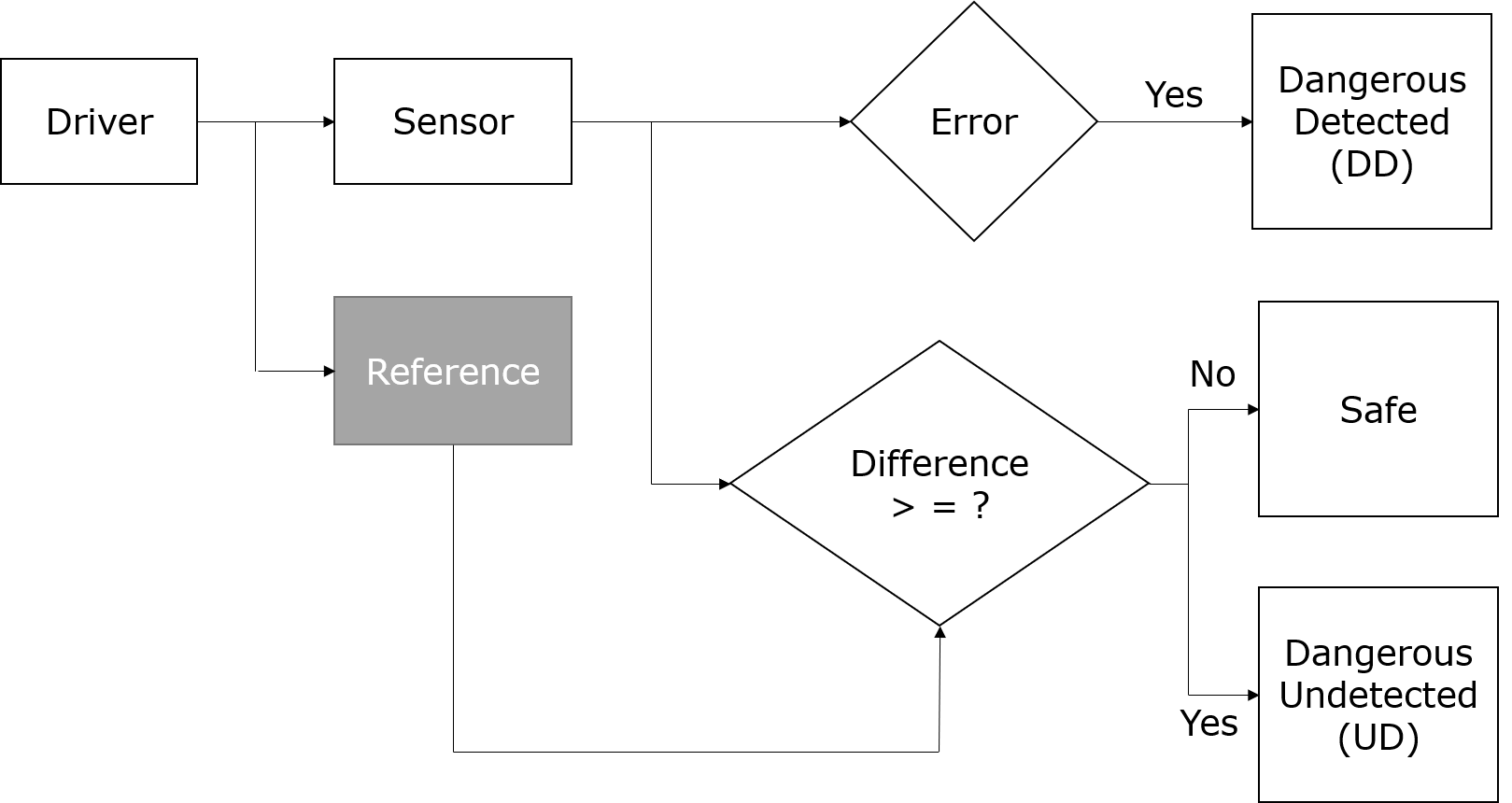

So, what does a hardware designer do to build embedded systems that are both secure and functionally safe? The answer is simple: use well-established techniques to guard against problems, detect them if they do occur, and take appropriate corrective action. Of course, implementing these techniques may be a lot harder than this simple answer suggests. For functional safety, a fault in critical logic should be detected. If a fault is not detected and does not cause any deviation from the expected (reference) behavior, then it is safe. However, an undetected fault that does cause a deviation is a serious concern, since it could lead to improper operation or even system failure.
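The decision rule in the paragraph above can be expressed as a small fault-injection tally. The Python sketch below flips each bit of a hypothetical 8-bit register, compares the faulty value with the fault-free (reference) value, and classifies each fault as detected, safe, or dangerous. The register value, the `used_mask` of bits that feed downstream logic, and the optional parity check are all illustrative assumptions; real fault campaigns typically run on the RTL or gate-level design in a simulator or emulator.

```python
from enum import Enum


class FaultOutcome(Enum):
    DETECTED = "detected"    # a safety mechanism flagged the fault
    SAFE = "safe"            # undetected, but behavior matches the reference
    DANGEROUS = "dangerous"  # undetected AND behavior deviates: the serious case


def classify(detected: bool, deviates: bool) -> FaultOutcome:
    """Apply the decision rule described in the text to one injected fault."""
    if detected:
        return FaultOutcome.DETECTED
    return FaultOutcome.DANGEROUS if deviates else FaultOutcome.SAFE


def parity(x: int) -> int:
    """Parity of an integer: 1 if it has an odd number of set bits."""
    return bin(x).count("1") & 1


def fault_campaign(value: int, width: int, used_mask: int, parity_check: bool) -> dict:
    """Flip each bit of a hypothetical register once and tally the outcomes.

    used_mask marks the bits that actually drive downstream logic; flips in
    other bits never reach an output. parity_check models a stored parity bit
    that is compared on every read.
    """
    stored = parity(value)
    tally = {outcome: 0 for outcome in FaultOutcome}
    for bit in range(width):
        faulty = value ^ (1 << bit)  # single-bit upset
        detected = parity_check and parity(faulty) != stored
        deviates = (faulty & used_mask) != (value & used_mask)
        tally[classify(detected, deviates)] += 1
    return tally


if __name__ == "__main__":
    for protected in (False, True):
        result = fault_campaign(0xA5, width=8, used_mask=0x0F, parity_check=protected)
        print(protected, {k.value: v for k, v in result.items()})
    # False {'detected': 0, 'safe': 4, 'dangerous': 4}
    # True {'detected': 8, 'safe': 0, 'dangerous': 0}
```

Without protection, half the single-bit faults in this toy register are dangerous; a parity check turns every single-bit flip into a detected fault, though it cannot correct the error and would miss a double-bit flip.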

Figure 1: Flowchart for Safe Design

Over the years, designers have developed several techniques for detecting, and sometimes correcting, faults that might compromise functional safety. These include:

There are also several standard techniques to provide security for embedded systems at the hardware level, including:

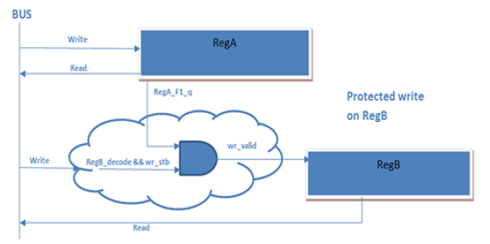

Figure 2: Representation of a Lock Register

Of course, there are a lot of implementation details behind these two lists. To find out much more about these techniques, I invite you to sign up for our webinar here. Both functional safety and security are critical topics for embedded systems in many applications, so learning how to design hardware properly for them is important. I think you’ll find the information interesting and valuable.
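As a rough illustration of the lock-register idea, here is a Python behavioral model: once software sets a sticky lock bit, writes to the registers it guards are ignored until the next reset. The class, the register names (`KEY`, `STATUS`, `LOCK`), and the return-value convention are hypothetical; real designs differ in which registers a lock covers and what, if anything, can clear it.

```python
class LockableRegisterBlock:
    """Toy register block: once LOCK is set, protected registers are read-only until reset."""

    def __init__(self, names: list[str], protected: set[str]) -> None:
        unknown = protected - set(names)
        if unknown:
            raise ValueError(f"protected registers not in block: {sorted(unknown)}")
        self._names = list(names)
        self._protected = set(protected)
        self.reset()

    def reset(self) -> None:
        """Hardware reset: in this model, the only way to clear the lock."""
        self._regs = {name: 0 for name in self._names}
        self._locked = False

    @property
    def locked(self) -> bool:
        return self._locked

    def write(self, name: str, value: int) -> bool:
        """Return True if the write took effect, False if the lock blocked it."""
        if name == "LOCK":
            if value & 1:
                self._locked = True  # sticky: stays set until reset()
                return True
            return not self._locked  # writing 0 cannot clear an active lock
        if name not in self._regs:
            raise KeyError(name)
        if self._locked and name in self._protected:
            return False  # blocked: protected register keeps its old value
        self._regs[name] = value
        return True

    def read(self, name: str) -> int:
        if name == "LOCK":
            return int(self._locked)
        return self._regs[name]


if __name__ == "__main__":
    blk = LockableRegisterBlock(["KEY", "STATUS"], protected={"KEY"})
    print(blk.write("KEY", 0x1234))  # True: not yet locked
    print(blk.write("LOCK", 1))      # True: lock engaged
    print(blk.write("KEY", 0xBEEF))  # False: blocked, KEY keeps 0x1234
    print(blk.write("STATUS", 7))    # True: STATUS is not protected
    print(blk.write("LOCK", 0))      # False: software cannot clear the lock
```

Making the lock sticky until reset is one simple design choice: even if an attacker later gains write access to the bus, the protected configuration cannot be altered without resetting the device.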
